Installing and Testing Kubernetes on Tencent Cloud

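1. k8s cluster planning

1.1. Kubernetes 1.10 dependencies

Kubernetes v1.10 is not supported or tested against every version of related packages such as etcd and docker. The recommended versions are: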

- docker: 1.11.2 to 1.13.1 and 17.03.x

- etcd: 3.1.12

- The full list is as follows:

- The supported etcd server version is 3.1.12, as compared to 3.0.17 in v1.9 (#60988)

- The validated docker versions are the same as for v1.9: 1.11.2 to 1.13.1 and 17.03.x (ref)

- The Go version is go1.9.3, as compared to go1.9.2 in v1.9. (#59012)

- The minimum supported go is the same as for v1.9: go1.9.1. (#55301)

- CNI is the same as v1.9: v0.6.0 (#51250)

- CSI is updated to 0.2.0 as compared to 0.1.0 in v1.9. (#60736)

- The dashboard add-on has been updated to v1.8.3, as compared to 1.8.0 in v1.9. (#57326)

- Heapster is the same as v1.9: v1.5.0. It will be upgraded in v1.11. (ref)

- Cluster Autoscaler has been updated to v1.2.0. (#60842, @mwielgus)

- Updates kube-dns to v1.14.8 (#57918, @rramkumar1)

- Influxdb is unchanged from v1.9: v1.3.3 (#53319)

- Grafana is unchanged from v1.9: v4.4.3 (#53319)

- CAdvisor is v0.29.1 (#60867)

- fluentd-gcp-scaler is v0.3.0 (#61269)

- Updated fluentd in fluentd-es-image to fluentd v1.1.0 (#58525, @monotek)

- fluentd-elasticsearch is v2.0.4 (#58525)

- Updated fluentd-gcp to v3.0.0. (#60722)

- Ingress glbc is v1.0.0 (#61302)

- OIDC authentication is coreos/go-oidc v2 (#58544)

- Updated fluentd-gcp to v2.0.11. (#56927, @x13n)

- Calico has been updated to v2.6.7 (#59130, @caseydavenport)

1.2 Test servers and environment planning

| Server name | IP | Role | Installed services |
|---|---|---|---|
| sh-saas-cvmk8s-master-01 | 10.12.96.3 | master | master, etcd |
| sh-saas-cvmk8s-master-02 | 10.12.96.5 | master | master, etcd |
| sh-saas-cvmk8s-master-03 | 10.12.96.13 | master | master, etcd |
| sh-saas-cvmk8s-node-01 | 10.12.96.2 | node | node |
| sh-saas-cvmk8s-node-02 | 10.12.96.4 | node | node |
| sh-saas-cvmk8s-node-03 | 10.12.96.6 | node | node |
| bs-ops-test-docker-dev-04 | 172.21.248.242 | private image registry | harbor |
| VIP | 10.12.96.100 | master VIP | netmask: 255.255.255.0 |

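All netmasks are 255.255.255.0.

All test servers run the latest CentOS Linux 7.4.

The VIP 10.12.96.100 is used only for the keepalived test. This document actually uses the Tencent Cloud LB + haproxy mode, and the Tencent Cloud LB VIP is 10.12.16.101.

Container network: 10.254.0.0/16. The container network must not conflict with any of the following:

- the cluster network CIDR of other clusters in the same VPC

- the CIDR of the VPC itself

- the CIDRs of the subnet routes in the VPC

- the CIDR of every route table shown by route-ctl list

Do not create the container network inside the VPC, and keep it out of the VPC route tables; use a network that does not exist in the VPC.

k8s service cluster network: 10.254.255.0/24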
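
The conflict rules above come down to checking whether two CIDR blocks share any address. The following is a minimal sketch of such a check in plain shell arithmetic; the function names `ip_to_int` and `cidr_overlap` are illustrative helpers and not part of any Tencent Cloud tool.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Exit 0 when the two CIDR blocks share at least one address, 1 otherwise.
cidr_overlap() {
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    # Two blocks overlap exactly when they agree under the shorter prefix.
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    mask=$(( $len == 0 ? 0 : (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$a_ip") & mask )) -eq $(( $(ip_to_int "$b_ip") & mask )) ]
}

# Example: the container network against the master/node subnet.
if cidr_overlap 10.254.0.0/16 10.12.96.0/24; then
    echo "conflict"
else
    echo "no conflict"
fi
```

The same function can be run against each CIDR reported by route-ctl list. Applied to the two ranges planned here, it reports that 10.254.255.0/24 lies inside 10.254.0.0/16, which may be worth reviewing before reusing these values.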

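1.3 The cloud controller manager tencentcloud-cloud-controller-manager

This test uses Tencent Cloud CVM hosts on a VPC network. The k8s masters and nodes share one subnet: 10.12.96.0/24.

tencentcloud-cloud-controller-manager is the k8s cloud controller manager released by Tencent Cloud: https://github.com/dbdd4us/tencentcloud-cloud-controller-manager

For its configuration, see: https://github.com/dbdd4us/tencentcloud-cloud-controller-manager/blob/master/README_zhCN.md

In its current version, tencentcloud-cloud-controller-manager mainly lets containers on k8s use the VPC network directly, so that other CVM hosts in the VPC can reach the containers in k8s directly.

Its requirements for k8s and related resources are:

- k8s node names must be IP addresses

- it must reach the api server through a non-HTTPS address

- the container network must be created with route-ctl rather than in the Tencent Cloud console; once created, it should appear as a secondary CIDR in the Tencent Cloud VPC

- Kubernetes 1.10.x or later

- Docker 17.06 or later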

2. Preparation before installing the k8s cluster

2.1 Operating system configuration

Run the following on all servers:

- Change the hostname

[root@VM_96_3_centos ~]# hostnamectl set-hostname sh-saas-cvmk8s-master-01

[root@VM_96_3_centos ~]# hostnamectl --pretty

[root@VM_96_3_centos ~]# hostnamectl --static

sh-saas-cvmk8s-master-01

[root@VM_96_3_centos ~]# hostnamectl --transient

sh-saas-cvmk8s-master-01

[root@VM_96_3_centos ~]# cat /etc/hostname

sh-saas-cvmk8s-master-01

The commands above change only one server; run the corresponding commands on the other servers to set their hostnames.
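
To avoid typing a different hostname by hand on every server, the IP-to-name mapping from the planning table can be kept in one small function. This is only a sketch: `hostname_for_ip` is a hypothetical helper, and the commented usage line assumes you pass in the server's own private IP.

```shell
# Map a server's private IP to its planned hostname (values from the table in 1.2).
hostname_for_ip() {
    case "$1" in
        10.12.96.3)  echo sh-saas-cvmk8s-master-01 ;;
        10.12.96.5)  echo sh-saas-cvmk8s-master-02 ;;
        10.12.96.13) echo sh-saas-cvmk8s-master-03 ;;
        10.12.96.2)  echo sh-saas-cvmk8s-node-01 ;;
        10.12.96.4)  echo sh-saas-cvmk8s-node-02 ;;
        10.12.96.6)  echo sh-saas-cvmk8s-node-03 ;;
        *) return 1 ;;   # unknown IP: print nothing and fail
    esac
}

# Usage on a given server (run as root), e.g. on 10.12.96.5:
#   hostnamectl set-hostname "$(hostname_for_ip 10.12.96.5)"
```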

- Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

- Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

- Disable SELinux

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

- Enable bridge forwarding

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

ls /proc/sys/net/bridge

- Configure the k8s yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

- Install dependency packages

yum install -y epel-release

yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget vim ntpdate libseccomp libtool-ltdl

- Install bash command completion

yum install -y bash-argsparse bash-completion bash-completion-extras

- Configure NTP

Tencent Cloud hosts already ship with an ntpd service, so this step is skipped there.

systemctl enable ntpdate.service

echo '*/30 * * * * /usr/sbin/ntpdate time7.aliyun.com >/dev/null 2>&1' > /tmp/crontab2.tmp

crontab /tmp/crontab2.tmp

systemctl start ntpdate.service

- Raise the open-file limit and related settings

echo "* soft nofile 65536" >> /etc/security/limits.conf

echo "* hard nofile 65536" >> /etc/security/limits.conf

echo "* soft nproc 65536" >> /etc/security/limits.conf

echo "* hard nproc 65536" >> /etc/security/limits.conf

echo "* soft memlock unlimited" >> /etc/security/limits.conf

echo "* hard memlock unlimited" >> /etc/security/limits.conf
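
The `echo ... >>` lines above append a duplicate entry every time they are re-run. A minimal sketch of an idempotent alternative follows; `append_once` is a hypothetical helper name, and the commented usage shows how it would replace one of the `echo` lines.

```shell
# Append a line to a file only if that exact line is not already present.
append_once() {
    file=$1; line=$2
    # -x: match the whole line, -F: treat the text literally (the entries start with "*").
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root):
#   append_once /etc/security/limits.conf "* soft nofile 65536"
#   append_once /etc/security/limits.conf "* hard nofile 65536"
```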

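2.2 Install docker

Install docker 17.06 on all node servers. Note: the highest docker version validated with k8s v1.10 is 17.03.x, and other versions are not recommended. However, Tencent Cloud requires 17.05 or later, so we use 17.06.x.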

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#yum-config-manager --enable docker-ce-edge

#yum-config-manager --enable docker-ce-test

#The official docker yum packages conflict, so the option --setopt=obsoletes=0 is needed. Tencent Cloud requires 17.05 or later.

yum install -y --setopt=obsoletes=0 docker-ce-17.06.2.ce-1.el7.centos docker-ce-selinux-17.06.2.ce-1.el7.centos

vim /usr/lib/systemd/system/docker.service

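#Find the ExecStart= line and add the registry mirror, the private registry and the network options, as follows:

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --iptables=false --ip-masq=false --bip=172.17.0.1/16 --registry-mirror=https://iw5fhz08.mirror.aliyuncs.com --insecure-registry 172.21.248.242

#Traffic between different nodes must be allowed

ExecStartPost=-/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

#Or run the following commands to modify the unit file: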

sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --iptables=false --ip-masq=false --bip=172.17.0.1/16 --registry-mirror=https://iw5fhz08.mirror.aliyuncs.com --insecure-registry 172.21.248.242#g" /usr/lib/systemd/system/docker.service

sed -i '/ExecStart=/a\ExecStartPost=-/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT' /usr/lib/systemd/system/docker.service

#The complete unit file is as follows:

[root@10 ~]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --iptables=false --ip-masq=false --bip=172.17.0.1/16 --registry-mirror=https://iw5fhz08.mirror.aliyuncs.com --insecure-registry 172.21.248.242

ExecStartPost=-/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

#Reload the configuration

systemctl daemon-reload

#Start Docker

systemctl enable docker

systemctl start docker
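
Because this section deliberately steps outside the docker range validated for k8s v1.10, it can help to confirm the installed version against the 17.05 minimum mentioned above. The sketch below relies on GNU `sort -V` for version ordering; `version_ge` is an illustrative helper name.

```shell
# Exit 0 when version $1 is greater than or equal to version $2.
version_ge() {
    # sort -V orders version strings; if the smaller of the two is $2, then $1 >= $2.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on a node where docker is running:
#   version_ge "$(docker version --format '{{.Server.Version}}')" 17.05 \
#       && echo "docker version is new enough"
```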

2.3 Install the harbor private image registry

The following only needs to be run on server 172.21.248.242:

yum -y install docker-compose

wget https://storage.googleapis.com/harbor-releases/release-1.4.0/harbor-offline-installer-v1.4.0.tgz

mkdir /data/

cd /data/

tar xvzf /root/harbor-offline-installer-v1.4.0.tgz

cd harbor

vim harbor.cfg

hostname = 172.21.248.242

sh install.sh

#To change the configuration:

docker-compose down -v

vim harbor.cfg

./prepare

docker-compose up -d

#To restart:

docker-compose stop

docker-compose start

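- The harbor admin UI is at: http://172.21.248.242

Username: admin Password: Harbor12345

- Push and pull test. On another machine:

docker login 172.21.248.242

Enter the username and password to log in.

- Pull a centos image from the official registry and push it to the private registry: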

docker pull centos

docker tag centos:latest 172.21.248.242/library/centos:latest

docker push 172.21.248.242/library/centos:latest

The image now shows up in the web UI.

- Pull the image:

docker pull 172.21.248.242/library/centos:latest

3. Install and configure etcd

3.1 Create the etcd certificates

Run the following on sh-saas-cvmk8s-master-01 only:

3.1.1 Set up cfssl

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

export PATH=/usr/local/bin:$PATH

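3.1.2 Create the CA configuration file

The IPs configured in the certificate below are the IPs of the etcd nodes. To simplify future expansion, consider adding a few reserved IP addresses; if domain names may be needed later, add them as well: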

mkdir -p /data/k8s/ssl

cd /data/k8s/ssl

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "k8s",

"OU": "System"

}]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@sh-saas-cvmk8s-master-01 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2018/06/27 15:16:02 [INFO] generating a new CA key and certificate from CSR

2018/06/27 15:16:02 [INFO] generate received request

2018/06/27 15:16:02 [INFO] received CSR

2018/06/27 15:16:02 [INFO] generating key: rsa-2048

2018/06/27 15:16:03 [INFO] encoded CSR

2018/06/27 15:16:03 [INFO] signed certificate with serial number 527592143849763980635125886376161678689174991664

[root@sh-saas-cvmk8s-master-01 ssl]# ll

total 20

-rw-r--r-- 1 root root 290 Jun 27 15:14 ca-config.json

-rw-r--r-- 1 root root 1005 Jun 27 15:16 ca.csr

-rw-r--r-- 1 root root 190 Jun 27 15:15 ca-csr.json

-rw------- 1 root root 1679 Jun 27 15:16 ca-key.pem

-rw-r--r-- 1 root root 1363 Jun 27 15:16 ca.pem

[root@sh-saas-cvmk8s-master-01 ssl]#

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.12.96.3",

"10.12.96.5",

"10.12.96.13",

"10.12.96.100",

"10.12.96.10",

"10.12.96.11"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

[root@sh-saas-cvmk8s-master-01 ssl]# ll

total 36

-rw-r--r-- 1 root root 290 Jun 27 15:14 ca-config.json

-rw-r--r-- 1 root root 1005 Jun 27 15:16 ca.csr

-rw-r--r-- 1 root root 190 Jun 27 15:15 ca-csr.json

-rw------- 1 root root 1679 Jun 27 15:16 ca-key.pem

-rw-r--r-- 1 root root 1363 Jun 27 15:16 ca.pem

-rw-r--r-- 1 root root 1135 Jun 27 15:20 etcd.csr

-rw-r--r-- 1 root root 391 Jun 27 15:20 etcd-csr.json

-rw------- 1 root root 1675 Jun 27 15:20 etcd-key.pem

-rw-r--r-- 1 root root 1509 Jun 27 15:20 etcd.pem

3.1.3 Copy the certificates to the other 2 etcd nodes

mkdir -p /etc/etcd/ssl

cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/

ssh -n 10.12.96.5 "mkdir -p /etc/etcd/ssl && exit"

ssh -n 10.12.96.13 "mkdir -p /etc/etcd/ssl && exit"

scp -r /etc/etcd/ssl/*.pem 10.12.96.5:/etc/etcd/ssl/

scp -r /etc/etcd/ssl/*.pem 10.12.96.13:/etc/etcd/ssl/

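3.2 Install etcd

k8s v1.10 was tested with etcd 3.1.12, so we use that version too. It is not available in the yum repositories, so it has to be installed from the binary release. Run the following on all 3 etcd nodes (that is, the master nodes):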

wget https://github.com/coreos/etcd/releases/download/v3.1.12/etcd-v3.1.12-linux-amd64.tar.gz

tar -xvf etcd-v3.1.12-linux-amd64.tar.gz

mv etcd-v3.1.12-linux-amd64/etcd* /usr/local/bin

#Create the systemd unit file for etcd.

cat > /usr/lib/systemd/system/etcd.service <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

ExecStart=/usr/local/bin/etcd \\

--name \${ETCD_NAME} \\

--cert-file=\${ETCD_CERT_FILE} \\

--key-file=\${ETCD_KEY_FILE} \\

--peer-cert-file=\${ETCD_PEER_CERT_FILE} \\

--peer-key-file=\${ETCD_PEER_KEY_FILE} \\

--trusted-ca-file=\${ETCD_TRUSTED_CA_FILE} \\

--peer-trusted-ca-file=\${ETCD_PEER_TRUSTED_CA_FILE} \\

--initial-advertise-peer-urls \${ETCD_INITIAL_ADVERTISE_PEER_URLS} \\

--listen-peer-urls \${ETCD_LISTEN_PEER_URLS} \\

--listen-client-urls \${ETCD_LISTEN_CLIENT_URLS} \\

--advertise-client-urls \${ETCD_ADVERTISE_CLIENT_URLS} \\

--initial-cluster-token \${ETCD_INITIAL_CLUSTER_TOKEN} \\

--initial-cluster \${ETCD_INITIAL_CLUSTER} \\

--initial-cluster-state new \\

--data-dir=\${ETCD_DATA_DIR}

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

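#etcd configuration file. This is the configuration for node 10.12.96.3; for the other two etcd nodes, replace the IP addresses with those of the respective node.

#Set ETCD_NAME to the name of the respective node: infra1, infra2 or infra3.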

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.12.96.3:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.12.96.3:2379,http://127.0.0.1:2379"

ETCD_NAME="infra1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.12.96.3:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.12.96.3:2379"

ETCD_INITIAL_CLUSTER="infra1=https://10.12.96.3:2380,infra2=https://10.12.96.5:2380,infra3=https://10.12.96.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

EOF

#etcd configuration file for node 10.12.96.5.

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.12.96.5:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.12.96.5:2379,http://127.0.0.1:2379"

ETCD_NAME="infra2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.12.96.5:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.12.96.5:2379"

ETCD_INITIAL_CLUSTER="infra1=https://10.12.96.3:2380,infra2=https://10.12.96.5:2380,infra3=https://10.12.96.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

EOF

#etcd configuration file for node 10.12.96.13.

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://10.12.96.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://10.12.96.13:2379,http://127.0.0.1:2379"

ETCD_NAME="infra3"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.12.96.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://10.12.96.13:2379"

ETCD_INITIAL_CLUSTER="infra1=https://10.12.96.3:2380,infra2=https://10.12.96.5:2380,infra3=https://10.12.96.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

EOF
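
The three configuration files above differ only in the node name and the node IP, so they can also be produced from a single template. This is a sketch under that assumption; `render_etcd_conf` is a hypothetical helper, and the commented usage line shows how it would be redirected to /etc/etcd/etcd.conf on each node.

```shell
# Print the etcd.conf for one member; $1 is the member name, $2 its IP.
render_etcd_conf() {
    name=$1; ip=$2
    cat <<EOF
ETCD_DATA_DIR="/var/lib/etcd/"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="infra1=https://10.12.96.3:2380,infra2=https://10.12.96.5:2380,infra3=https://10.12.96.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
EOF
}

# Usage on 10.12.96.5 (as root):
#   render_etcd_conf infra2 10.12.96.5 > /etc/etcd/etcd.conf
```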

mkdir /var/lib/etcd/

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

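Once the service is running on all three etcd nodes, run the following command to check the status. Output like the following means the configuration succeeded.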

etcdctl \

--ca-file=/etc/etcd/ssl/ca.pem \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

cluster-health

2018-06-27 16:18:58.586432 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

2018-06-27 16:18:58.586965 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

member 8a0005e5779bed5f is healthy: got healthy result from https://10.12.96.13:2379

member 955000a144b11fb7 is healthy: got healthy result from https://10.12.96.5:2379

member d7001efc12afef41 is healthy: got healthy result from https://10.12.96.3:2379

cluster is healthy
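
For scripted checks, the cluster-health output shown above can be parsed instead of read by eye. The following is a rough sketch that assumes the output format stays as printed here; `etcd_cluster_ok` is an illustrative helper that reads the command output on stdin.

```shell
# Read `etcdctl cluster-health` output on stdin; succeed only if the expected
# number of members report healthy and the summary line is present.
etcd_cluster_ok() {
    expected=$1
    out=$(cat)
    # grep -c exits non-zero on zero matches, so ignore its exit status.
    healthy=$(printf '%s\n' "$out" | grep -c '^member .* is healthy' || true)
    printf '%s\n' "$out" | grep -qx 'cluster is healthy' && [ "$healthy" -eq "$expected" ]
}

# Usage:
#   etcdctl --ca-file=/etc/etcd/ssl/ca.pem --cert-file=/etc/etcd/ssl/etcd.pem \
#       --key-file=/etc/etcd/ssl/etcd-key.pem cluster-health | etcd_cluster_ok 3
```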

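4. Load balancing for the master nodes

The k8s master api server needs load balancing, otherwise it is a single point of failure. On Tencent Cloud this can be done with an elastic IP plus keepalived, or with Tencent Cloud LB + haproxy.

4.1 Using keepalived

I only tested manually binding the LB to one of the master nodes and configuring keepalived. For production use, see 7.5 so that the VIP can be re-bound dynamically on failure.

4.1.1 Install keepalived

Install keepalived on the 3 master nodes:

yum install -y keepalived

systemctl enable keepalived

4.1.2 Configure keepalived

- Generate the configuration file on sh-saas-cvmk8s-master-01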

cat <<EOF > /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://10.12.96.100:6443"

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 61

priority 100

advert_int 1

mcast_src_ip 10.12.96.3

nopreempt

authentication {

auth_type PASS

auth_pass kwi28x7sx37uw72

}

unicast_peer {

10.12.96.5

10.12.96.13

}

virtual_ipaddress {

10.12.96.100/24

}

track_script {

CheckK8sMaster

}

}

EOF

- Generate the configuration file on sh-saas-cvmk8s-master-02

cat <<EOF > /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://10.12.96.100:6443"

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 61

priority 90

advert_int 1

mcast_src_ip 10.12.96.5

nopreempt

authentication {

auth_type PASS

auth_pass kwi28x7sx37uw72

}

unicast_peer {

10.12.96.3

10.12.96.13

}

virtual_ipaddress {

10.12.96.100/24

}

track_script {

CheckK8sMaster

}

}

EOF

- Generate the configuration file on sh-saas-cvmk8s-master-03

cat <<EOF > /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://10.12.96.100:6443"

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 61

priority 80

advert_int 1

mcast_src_ip 10.12.96.13

nopreempt

authentication {

auth_type PASS

auth_pass kwi28x7sx37uw72

}

unicast_peer {

10.12.96.3

10.12.96.5

}

virtual_ipaddress {

10.12.96.100/24

}

track_script {

CheckK8sMaster

}

}

EOF
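
The three keepalived files above differ only in priority, mcast_src_ip and the unicast_peer list, so they can be generated from one template. The sketch below reproduces the same settings; `render_keepalived_conf`, `MASTERS` and `VIP` are names introduced here for illustration, and the auth password is passed as an argument instead of being hard-coded.

```shell
MASTERS="10.12.96.3 10.12.96.5 10.12.96.13"
VIP=10.12.96.100

# Print keepalived.conf for one master: $1 local IP, $2 priority, $3 auth password.
render_keepalived_conf() {
    local_ip=$1; priority=$2; auth_pass=$3
    peers=""
    for m in $MASTERS; do
        # Every master except the local one is a unicast peer.
        if [ "$m" != "$local_ip" ]; then
            peers="${peers}        ${m}
"
        fi
    done
    cat <<EOF
global_defs {
    router_id LVS_k8s
}
vrrp_script CheckK8sMaster {
    script "curl -k https://${VIP}:6443"
    interval 3
    timeout 9
    fall 2
    rise 2
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 61
    priority ${priority}
    advert_int 1
    mcast_src_ip ${local_ip}
    nopreempt
    authentication {
        auth_type PASS
        auth_pass ${auth_pass}
    }
    unicast_peer {
${peers}    }
    virtual_ipaddress {
        ${VIP}/24
    }
    track_script {
        CheckK8sMaster
    }
}
EOF
}

# Usage on sh-saas-cvmk8s-master-02 (as root; the password is a placeholder):
#   render_keepalived_conf 10.12.96.5 90 'your-auth-pass' > /etc/keepalived/keepalived.conf
```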

4.1.3 Start and verify keepalived

systemctl restart keepalived

[root@sh-saas-cvmk8s-master-01 k8s]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 52:54:00:52:fe:50 brd ff:ff:ff:ff:ff:ff

inet 10.12.96.3/24 brd 10.12.96.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.12.96.100/24 scope global secondary eth0

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:ed:6c:e3:ab brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global docker0

valid_lft forever preferred_lft forever

[root@sh-saas-cvmk8s-master-01 k8s]#

The VIP 10.12.96.100 is on sh-saas-cvmk8s-master-01. After shutting down sh-saas-cvmk8s-master-01, the VIP moves to sh-saas-cvmk8s-master-02.

[root@sh-saas-cvmk8s-master-02 etcd]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 52:54:00:13:65:cf brd ff:ff:ff:ff:ff:ff

inet 10.12.96.5/24 brd 10.12.96.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.12.96.100/24 scope global secondary eth0

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:da:c8:18:2a brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global docker0

valid_lft forever preferred_lft forever

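4.2 Using Tencent Cloud LB + haproxy

Tencent Cloud LB has one limitation: a real server behind the LB cannot reach the LB's own VIP. We therefore need 2 additional servers running haproxy, which are then added to the Tencent Cloud LB as backends.

On the Tencent Cloud LB, configure listeners for the k8s api server secure TCP port 6443 and the insecure TCP port 8088 (the insecure listener can be omitted if tencentcloud-cloud-controller-manager uses the ServiceAccount method).

The steps to install and configure haproxy on the 2 servers are:

yum install haproxy

vim /etc/haproxy/haproxy.cfg

#Append at the end: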

listen proxy_server_6443

bind 0.0.0.0:6443

mode tcp

balance roundrobin

server k8s_master1 10.12.96.3:6443 check inter 5000 rise 1 fall 2

server k8s_master2 10.12.96.5:6443 check inter 5000 rise 1 fall 2

server k8s_master3 10.12.96.13:6443 check inter 5000 rise 1 fall 2

listen proxy_server_8088

bind 0.0.0.0:8088

mode tcp

balance roundrobin

server k8s_master1 10.12.96.3:8088 check inter 5000 rise 1 fall 2

server k8s_master2 10.12.96.5:8088 check inter 5000 rise 1 fall 2

server k8s_master3 10.12.96.13:8088 check inter 5000 rise 1 fall 2

#Start haproxy

/etc/init.d/haproxy start
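
The two listen blocks above are identical apart from the port, so they can be generated from the list of masters. This is only a sketch; `render_listen` and `MASTERS` are names introduced here, and the output is meant to be appended to haproxy.cfg by hand or by redirect.

```shell
MASTERS="10.12.96.3 10.12.96.5 10.12.96.13"

# Print a TCP listen block that balances one port across all masters.
render_listen() {
    port=$1; i=0
    printf 'listen proxy_server_%s\n' "$port"
    printf '    bind 0.0.0.0:%s\n    mode tcp\n    balance roundrobin\n' "$port"
    for m in $MASTERS; do
        i=$((i + 1))
        printf '    server k8s_master%s %s:%s check inter 5000 rise 1 fall 2\n' "$i" "$m" "$port"
    done
}

# Usage (as root):
#   { render_listen 6443; render_listen 8088; } >> /etc/haproxy/haproxy.cfg
```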

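5. Install and configure the k8s cluster with kubeadm

5.1 Download the official images

The official images are very slow or unreachable from mainland China, so first pull them and push them to the private registry: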

docker pull anjia0532/pause-amd64:3.1

docker pull anjia0532/google-containers.kube-apiserver-amd64:v1.10.5

docker pull anjia0532/google-containers.kube-proxy-amd64:v1.10.5

docker pull anjia0532/google-containers.kube-scheduler-amd64:v1.10.5

docker pull anjia0532/google-containers.kube-controller-manager-amd64:v1.10.5

docker pull anjia0532/k8s-dns-sidecar-amd64:1.14.8

docker pull anjia0532/k8s-dns-kube-dns-amd64:1.14.8

docker pull anjia0532/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker pull anjia0532/kubernetes-dashboard-amd64:v1.8.3

docker pull anjia0532/heapster-influxdb-amd64:v1.3.3

docker pull anjia0532/heapster-grafana-amd64:v4.4.3

docker pull anjia0532/heapster-amd64:v1.5.3

docker pull anjia0532/etcd-amd64:3.1.12

docker pull anjia0532/fluentd-elasticsearch:v2.0.4

docker pull anjia0532/cluster-autoscaler:v1.2.0

docker tag anjia0532/pause-amd64:3.1 172.21.248.242/k8s/pause-amd64:3.1

docker tag anjia0532/google-containers.kube-apiserver-amd64:v1.10.5 172.21.248.242/k8s/kube-apiserver-amd64:v1.10.5

docker tag anjia0532/google-containers.kube-proxy-amd64:v1.10.5 172.21.248.242/k8s/kube-proxy-amd64:v1.10.5

docker tag anjia0532/google-containers.kube-scheduler-amd64:v1.10.5 172.21.248.242/k8s/kube-scheduler-amd64:v1.10.5

docker tag anjia0532/google-containers.kube-controller-manager-amd64:v1.10.5 172.21.248.242/k8s/kube-controller-manager-amd64:v1.10.5

docker tag anjia0532/k8s-dns-sidecar-amd64:1.14.8 172.21.248.242/k8s/k8s-dns-sidecar-amd64:1.14.8

docker tag anjia0532/k8s-dns-kube-dns-amd64:1.14.8 172.21.248.242/k8s/k8s-dns-kube-dns-amd64:1.14.8

docker tag anjia0532/k8s-dns-dnsmasq-nanny-amd64:1.14.8 172.21.248.242/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker tag anjia0532/kubernetes-dashboard-amd64:v1.8.3 172.21.248.242/k8s/kubernetes-dashboard-amd64:v1.8.3

docker tag anjia0532/heapster-influxdb-amd64:v1.3.3 172.21.248.242/k8s/heapster-influxdb-amd64:v1.3.3

docker tag anjia0532/heapster-grafana-amd64:v4.4.3 172.21.248.242/k8s/heapster-grafana-amd64:v4.4.3

docker tag anjia0532/heapster-amd64:v1.5.3 172.21.248.242/k8s/heapster-amd64:v1.5.3

docker tag anjia0532/etcd-amd64:3.1.12 172.21.248.242/k8s/etcd-amd64:3.1.12

docker tag anjia0532/fluentd-elasticsearch:v2.0.4 172.21.248.242/k8s/fluentd-elasticsearch:v2.0.4

docker tag anjia0532/cluster-autoscaler:v1.2.0 172.21.248.242/k8s/cluster-autoscaler:v1.2.0

docker push 172.21.248.242/k8s/pause-amd64:3.1

docker push 172.21.248.242/k8s/kube-apiserver-amd64:v1.10.5

docker push 172.21.248.242/k8s/kube-proxy-amd64:v1.10.5

docker push 172.21.248.242/k8s/kube-scheduler-amd64:v1.10.5

docker push 172.21.248.242/k8s/kube-controller-manager-amd64:v1.10.5

docker push 172.21.248.242/k8s/k8s-dns-sidecar-amd64:1.14.8

docker push 172.21.248.242/k8s/k8s-dns-kube-dns-amd64:1.14.8

docker push 172.21.248.242/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker push 172.21.248.242/k8s/kubernetes-dashboard-amd64:v1.8.3

docker push 172.21.248.242/k8s/heapster-influxdb-amd64:v1.3.3

docker push 172.21.248.242/k8s/heapster-grafana-amd64:v4.4.3

docker push 172.21.248.242/k8s/heapster-amd64:v1.5.3

docker push 172.21.248.242/k8s/etcd-amd64:3.1.12

docker push 172.21.248.242/k8s/fluentd-elasticsearch:v2.0.4

docker push 172.21.248.242/k8s/cluster-autoscaler:v1.2.0
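
The pull, tag and push commands above follow one naming rule: drop the mirror namespace and the `google-containers.` prefix, then prepend the private registry path. The sketch below captures that rule; `target_image` and `mirror_image` are hypothetical helpers, and `mirror_image` assumes docker is already logged in to 172.21.248.242.

```shell
REGISTRY=172.21.248.242/k8s

# Compute the private-registry name for a mirrored image reference.
target_image() {
    name=${1#*/}                      # drop the "anjia0532/" namespace
    name=${name#google-containers.}   # drop the mirror's name prefix, if present
    echo "$REGISTRY/$name"
}

# Pull one image from the mirror, retag it, and push it to the private registry.
mirror_image() {
    dst=$(target_image "$1")
    docker pull "$1" && docker tag "$1" "$dst" && docker push "$dst"
}

# Usage:
#   for img in anjia0532/pause-amd64:3.1 \
#              anjia0532/google-containers.kube-apiserver-amd64:v1.10.5; do
#       mirror_image "$img"
#   done
```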

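5.2 Install kubelet, kubeadm and kubectl

Install on all nodes:

yum install -y kubelet-1.10.5-0.x86_64 kubeadm-1.10.5-0.x86_64 kubectl-1.10.5-0.x86_64

Modify the kubelet configuration on all nodes. The kubelet configuration lives in /etc/systemd/system/kubelet.service.d/10-kubeadm.conf; the old settings can be overridden by creating a new file /etc/systemd/system/kubelet.service.d/20-kubeadm.conf. By default kubeadm supports only CNI networking, not kubenet, but the Tencent CCM requires kubenet, so kubenet is configured here. On each machine, change the --hostname-override value (for example --hostname-override=10.12.96.3) to that machine's own IP.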

printf '[Service]\nEnvironment="KUBELET_NETWORK_ARGS=--network-plugin=kubenet"\nEnvironment="KUBELET_DNS_ARGS=--cluster-dns=10.254.255.10 --cluster-domain=cluster.local"\n' > /etc/systemd/system/kubelet.service.d/20-kubeadm.conf

printf 'Environment="KUBELET_EXTRA_ARGS=--v=2 --fail-swap-on=false --pod-infra-container-image=172.21.248.242/k8s/pause-amd64:3.1 --cloud-provider=external --hostname-override=10.12.96.6"\n' >> /etc/systemd/system/kubelet.service.d/20-kubeadm.conf

printf 'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"\n' >> /etc/systemd/system/kubelet.service.d/20-kubeadm.conf

Final configuration:

[root@sh-saas-cvmk8s-master-01 ~]# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"

Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"

Environment="KUBELET_AUTHZ_ARGS=--authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.crt"

Environment="KUBELET_CADVISOR_ARGS=--cadvisor-port=0"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=systemd"

Environment="KUBELET_CERTIFICATE_ARGS=--rotate-certificates=true --cert-dir=/var/lib/kubelet/pki"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_CADVISOR_ARGS $KUBELET_CGROUP_ARGS $KUBELET_CERTIFICATE_ARGS $KUBELET_EXTRA_ARGS

[root@sh-saas-cvmk8s-master-01 ~]# cat /etc/systemd/system/kubelet.service.d/20-kubeadm.conf

[Service]

Environment="KUBELET_NETWORK_ARGS=--network-plugin=kubenet"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.254.255.10 --cluster-domain=cluster.local"

Environment="KUBELET_EXTRA_ARGS=--v=2 --fail-swap-on=false --pod-infra-container-image=172.21.248.242/k8s/pause-amd64:3.1 --cloud-provider=external --hostname-override=10.12.96.2"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"
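
Since --hostname-override is the only value in 20-kubeadm.conf that changes from machine to machine, the drop-in can be rendered from the node IP. A minimal sketch, with `render_kubelet_dropin` as an illustrative helper name:

```shell
# Print the kubelet drop-in for one node; $1 is that node's own IP.
render_kubelet_dropin() {
    node_ip=$1
    cat <<EOF
[Service]
Environment="KUBELET_NETWORK_ARGS=--network-plugin=kubenet"
Environment="KUBELET_DNS_ARGS=--cluster-dns=10.254.255.10 --cluster-domain=cluster.local"
Environment="KUBELET_EXTRA_ARGS=--v=2 --fail-swap-on=false --pod-infra-container-image=172.21.248.242/k8s/pause-amd64:3.1 --cloud-provider=external --hostname-override=${node_ip}"
Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"
EOF
}

# Usage on node 10.12.96.4 (as root):
#   render_kubelet_dropin 10.12.96.4 > /etc/systemd/system/kubelet.service.d/20-kubeadm.conf
```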

Apply the configuration:

systemctl daemon-reload

systemctl enable kubelet

Enable command-line completion:

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

source <(kubeadm completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

echo "source <(kubeadm completion bash)" >> ~/.bashrc

5.3 Configure the cluster with kubeadm

5.3.1 Generate the kubeadm configuration file

Generate the kubeadm configuration file on the master nodes sh-saas-cvmk8s-master-01, sh-saas-cvmk8s-master-02 and sh-saas-cvmk8s-master-03:

cat <<EOF > config.yaml

apiVersion: kubeadm.k8s.io/v1alpha1

kind: MasterConfiguration

cloudProvider: external

nodeName: 10.12.96.13

etcd:

endpoints:

- https://10.12.96.3:2379

- https://10.12.96.5:2379

- https://10.12.96.13:2379

caFile: /etc/etcd/ssl/ca.pem

certFile: /etc/etcd/ssl/etcd.pem

keyFile: /etc/etcd/ssl/etcd-key.pem

dataDir: /var/lib/etcd

kubernetesVersion: 1.10.5

api:

advertiseAddress: "10.12.96.13"

controlPlaneEndpoint: "10.12.16.101:8443"

token: "uirbod.udq3j3mhciwevjhh"

tokenTTL: "0s"

apiServerCertSANs:

- sh-saas-cvmk8s-master-01

- sh-saas-cvmk8s-master-02

- sh-saas-cvmk8s-master-03

- sh-saas-cvmk8s-master-04

- sh-saas-cvmk8s-master-05

- 10.12.96.3

- 10.12.96.5

- 10.12.96.13

- 10.12.16.101

- 10.12.96.10

- 10.12.96.11

- api.cvmk8s.dev.internal.huilog.com

apiServerExtraArgs:

enable-swagger-ui: "true"

insecure-bind-address: 0.0.0.0

insecure-port: "8088"

networking:

dnsDomain: cluster.local

podSubnet: 10.254.0.0/16

serviceSubnet: 10.254.255.0/24

featureGates:

CoreDNS: true

imageRepository: "172.21.248.242/k8s"

EOF

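Notes:

- The token can be generated with kubeadm token generate and must be identical on all nodes.

- sh-saas-cvmk8s-master-04, sh-saas-cvmk8s-master-05, 10.12.96.10 and 10.12.96.11 are reserved for masters added later.

- When tencentcloud-cloud-controller-manager talks to the k8s api server over a non-HTTPS interface, the insecure-bind-address and insecure-port parameters must be added.

- nodeName must be changed to the local IP, because tencentcloud-cloud-controller-manager requires node names to be IP addresses.

- advertiseAddress must be changed to the local IP; controlPlaneEndpoint is the VIP address plus port.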
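
The notes above imply two per-master chores: keeping the token well-formed and identical everywhere, and swapping in the local IP for nodeName and advertiseAddress. The sketch below illustrates both; `valid_token` and `localize_config` are hypothetical helpers, and config.tpl.yaml in the usage comment is an assumed name for a shared copy of config.yaml.

```shell
# Exit 0 when the argument has the kubeadm bootstrap token shape:
# 6 characters, a dot, then 16 characters, all lowercase letters or digits.
valid_token() {
    printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# Read a kubeadm config on stdin and print it with nodeName and
# advertiseAddress set to the given IP; all other lines pass through unchanged.
localize_config() {
    ip=$1
    sed -e "s/^nodeName: .*/nodeName: $ip/" \
        -e "s/^\([[:space:]]*advertiseAddress:\).*/\1 \"$ip\"/"
}

# Usage on master 10.12.96.5:
#   valid_token "uirbod.udq3j3mhciwevjhh" && localize_config 10.12.96.5 < config.tpl.yaml > config.yaml
```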

5.3.2 Initialize the cluster on sh-saas-cvmk8s-master-01

[root@sh-saas-cvmk8s-master-01 k8s]# kubeadm init --config config.yaml

[init] Using Kubernetes version: v1.10.5

[init] Using Authorization modes: [Node RBAC]

[init] WARNING: For cloudprovider integrations to work --cloud-provider must be set for all kubelets in the cluster.

(/etc/systemd/system/kubelet.service.d/10-kubeadm.conf should be edited for this purpose)

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

Flag --insecure-bind-address has been deprecated, This flag will be removed in a future version.

Flag --insecure-port has been deprecated, This flag will be removed in a future version.

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [10.12.96.3 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local sh-saas-cvmk8s-master-01 sh-saas-cvmk8s-master-02 sh-saas-cvmk8s-master-03 sh-saas-cvmk8s-master-04 sh-saas-cvmk8s-master-05 api.cvmk8s.dev.internal.huilog.com] and IPs [10.254.255.1 10.12.16.101 10.12.96.3 10.12.96.5 10.12.96.13 10.12.16.101 10.12.96.10 10.12.96.11]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 12.506839 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node 10.12.96.3 as master by adding a label and a taint

[markmaster] Master 10.12.96.3 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: uirbod.udq3j3mhciwevjhh

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.12.16.101:6443 --token uirbod.udq3j3mhciwevjhh --discovery-token-ca-cert-hash sha256:8f9113bc0f118c3f4b675a2f0e3e18f0e091044ec74d5240b3c62e261cac6cae

As the output suggests, run the following commands; after that, kubectl can be used directly.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

If something goes wrong, kubeadm reset returns the node to its initial state so that it can be initialized again:

kubeadm reset

# kubeadm reset does not clear the data stored in etcd; wipe it manually, otherwise stale data causes many problems on re-initialization

systemctl stop etcd.service && rm -rf /var/lib/etcd/* && systemctl start etcd.service

# Then run the initialization again

kubeadm init --config config.yaml

If there were no errors, the cluster state looks like this:

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

10.12.96.3 Ready master 3m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get all --all-namespaces -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system pod/coredns-7997f8864c-gcjrz 0/1 Pending 0 2m <none> <none>

kube-system pod/coredns-7997f8864c-pfcxk 0/1 Pending 0 2m <none> <none>

kube-system pod/kube-apiserver-10.12.96.3 1/1 Running 0 1m <none> 10.12.96.3

kube-system pod/kube-controller-manager-10.12.96.3 1/1 Running 0 2m <none> 10.12.96.3

kube-system pod/kube-proxy-7bdn2 1/1 Running 0 2m <none> 10.12.96.3

kube-system pod/kube-scheduler-10.12.96.3 1/1 Running 0 2m <none> 10.12.96.3

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 10.254.255.1 <none> 443/TCP 3m <none>

kube-system service/kube-dns ClusterIP 10.254.255.10 <none> 53/UDP,53/TCP 3m k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

kube-system daemonset.apps/kube-proxy 1 1 1 1 1 <none> 3m kube-proxy 172.21.248.242/k8s/kube-proxy-amd64:v1.10.5 k8s-app=kube-proxy

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

kube-system deployment.apps/coredns 2 2 2 0 3m coredns coredns/coredns:1.0.6 k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

kube-system replicaset.apps/coredns-7997f8864c 2 2 0 2m coredns coredns/coredns:1.0.6 k8s-app=kube-dns,pod-template-hash=3553944207

5.3.3 Initializing the other two master nodes

First distribute the certificates just generated on the first master to the other master nodes; worker nodes do not need them.

scp -r /etc/kubernetes/pki 10.12.96.5:/etc/kubernetes/

scp -r /etc/kubernetes/pki 10.12.96.13:/etc/kubernetes/
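The copy step can also be scripted. The following is a minimal sketch, assuming root SSH access from the first master and that the other two masters are 10.12.96.5 and 10.12.96.13 as in the planning table. The function name `distribute_pki` and the `DRY_RUN` variable are hypothetical, introduced only for this illustration.

```shell
# Hypothetical helper: copy the PKI directory generated on the first master
# to each host given as an argument. With DRY_RUN=1 it only prints the
# scp commands instead of running them.
distribute_pki() {
    local host
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -r /etc/kubernetes/pki ${host}:/etc/kubernetes/"
        else
            scp -r /etc/kubernetes/pki "${host}:/etc/kubernetes/"
        fi
    done
}

# Preview the commands for the other two masters.
DRY_RUN=1 distribute_pki 10.12.96.5 10.12.96.13
```

Running the preview first makes it easy to confirm the target addresses before any files are copied.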

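Then initialize the other two masters. Note that nodeName in the kubeadm configuration file must be changed to the local machine's IP, because tencentcloud-cloud-controller-manager requires node names to be IP addresses: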
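One way to produce the per-host configuration is to rewrite the nodeName line with sed. This is a sketch only: it assumes config.yaml contains a single top-level `nodeName:` line, and the function name `set_node_name` and the output file name are hypothetical.

```shell
# Hypothetical helper: write a copy of a kubeadm config file with nodeName
# set to the given IP. Assumes exactly one top-level "nodeName:" line.
set_node_name() {
    local src="$1" ip="$2" dst="$3"
    sed "s/^nodeName:.*/nodeName: ${ip}/" "$src" > "$dst"
}

# Example for master-02 (run in the directory that holds config.yaml):
# set_node_name config.yaml 10.12.96.5 config-10.12.96.5.yaml
```

Writing to a separate file keeps the original config.yaml unchanged, so the same source can be reused for each master.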

[root@sh-saas-cvmk8s-master-02 k8s]# kubeadm init --config config.yaml

[init] Using Kubernetes version: v1.10.5

[init] Using Authorization modes: [Node RBAC]

[init] WARNING: For cloudprovider integrations to work --cloud-provider must be set for all kubelets in the cluster.

(/etc/systemd/system/kubelet.service.d/10-kubeadm.conf should be edited for this purpose)

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

Flag --insecure-bind-address has been deprecated, This flag will be removed in a future version.

Flag --insecure-port has been deprecated, This flag will be removed in a future version.

[preflight] Starting the kubelet service

[certificates] Using the existing ca certificate and key.

[certificates] Using the existing apiserver certificate and key.

[certificates] Using the existing apiserver-kubelet-client certificate and key.

[certificates] Using the existing sa key.

[certificates] Using the existing front-proxy-ca certificate and key.

[certificates] Using the existing front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 0.019100 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node 10.12.96.5 as master by adding a label and a taint

[markmaster] Master 10.12.96.5 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: uirbod.udq3j3mhciwevjhh

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.12.16.101:6443 --token uirbod.udq3j3mhciwevjhh --discovery-token-ca-cert-hash sha256:8f9113bc0f118c3f4b675a2f0e3e18f0e091044ec74d5240b3c62e261cac6cae

[root@sh-saas-cvmk8s-master-03 k8s]# kubeadm init --config config.yaml

[init] Using Kubernetes version: v1.10.5

[init] Using Authorization modes: [Node RBAC]

[init] WARNING: For cloudprovider integrations to work --cloud-provider must be set for all kubelets in the cluster.

(/etc/systemd/system/kubelet.service.d/10-kubeadm.conf should be edited for this purpose)

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

Flag --insecure-bind-address has been deprecated, This flag will be removed in a future version.

Flag --insecure-port has been deprecated, This flag will be removed in a future version.

[preflight] Starting the kubelet service

[certificates] Using the existing ca certificate and key.

[certificates] Using the existing apiserver certificate and key.

[certificates] Using the existing apiserver-kubelet-client certificate and key.

[certificates] Using the existing sa key.

[certificates] Using the existing front-proxy-ca certificate and key.

[certificates] Using the existing front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 0.020281 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node 10.12.96.13 as master by adding a label and a taint

[markmaster] Master 10.12.96.13 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: uirbod.udq3j3mhciwevjhh

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.12.16.101:6443 --token uirbod.udq3j3mhciwevjhh --discovery-token-ca-cert-hash sha256:8f9113bc0f118c3f4b675a2f0e3e18f0e091044ec74d5240b3c62e261cac6cae

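Note that the hash printed on all three masters is identical: it is computed from the CA certificate, and the masters share the same CA.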
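The value after `sha256:` is the SHA-256 digest of the CA public key, so it can be recomputed on any master if the join command is lost. The sketch below wraps the openssl pipeline given in the kubeadm documentation; the function name `ca_cert_hash` is hypothetical, and it assumes an RSA CA key, which is what kubeadm generates by default.

```shell
# Hypothetical helper: print the hex SHA-256 digest of the public key in a
# CA certificate (default: the kubeadm CA). Assumes an RSA key.
ca_cert_hash() {
    local ca="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Example, on a master:
# echo "sha256:$(ca_cert_hash)"
```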

Checking the cluster state now shows three master nodes. Every pod except coredns now has two more instances than before; coredns already had two replicas.

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

10.12.96.13 Ready master 34s v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.3 Ready master 10m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.5 Ready master 1m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get all --all-namespaces -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system pod/coredns-7997f8864c-gcjrz 0/1 Pending 0 9m <none> <none>

kube-system pod/coredns-7997f8864c-pfcxk 0/1 Pending 0 9m <none> <none>

kube-system pod/kube-apiserver-10.12.96.13 1/1 Running 0 38s <none> 10.12.96.13

kube-system pod/kube-apiserver-10.12.96.3 1/1 Running 0 8m <none> 10.12.96.3

kube-system pod/kube-apiserver-10.12.96.5 1/1 Running 0 1m <none> 10.12.96.5

kube-system pod/kube-controller-manager-10.12.96.13 1/1 Running 0 38s <none> 10.12.96.13

kube-system pod/kube-controller-manager-10.12.96.3 1/1 Running 0 9m <none> 10.12.96.3

kube-system pod/kube-controller-manager-10.12.96.5 1/1 Running 0 1m <none> 10.12.96.5

kube-system pod/kube-proxy-7bdn2 1/1 Running 0 9m <none> 10.12.96.3

kube-system pod/kube-proxy-mxvjf 1/1 Running 0 1m <none> 10.12.96.5

kube-system pod/kube-proxy-zvhm5 1/1 Running 0 41s <none> 10.12.96.13

kube-system pod/kube-scheduler-10.12.96.13 1/1 Running 0 38s <none> 10.12.96.13

kube-system pod/kube-scheduler-10.12.96.3 1/1 Running 0 9m <none> 10.12.96.3

kube-system pod/kube-scheduler-10.12.96.5 1/1 Running 0 1m <none> 10.12.96.5

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 10.254.255.1 <none> 443/TCP 10m <none>

kube-system service/kube-dns ClusterIP 10.254.255.10 <none> 53/UDP,53/TCP 10m k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

kube-system daemonset.apps/kube-proxy 3 3 3 3 3 <none> 10m kube-proxy 172.21.248.242/k8s/kube-proxy-amd64:v1.10.5 k8s-app=kube-proxy

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

kube-system deployment.apps/coredns 2 2 2 0 10m coredns coredns/coredns:1.0.6 k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

kube-system replicaset.apps/coredns-7997f8864c 2 2 0 9m coredns coredns/coredns:1.0.6 k8s-app=kube-dns,pod-template-hash=3553944207


PS: by default the masters do not run workload pods. The following command lets the masters run pods as well; this is not recommended in production.

kubectl taint nodes --all node-role.kubernetes.io/master-

5.3.4 Joining the worker nodes to the cluster

On each of the three worker nodes, run the following command to join the cluster:

[root@sh-saas-cvmk8s-node-01 ~]# kubeadm join 10.12.16.101:6443 --token uirbod.udq3j3mhciwevjhh --discovery-token-ca-cert-hash sha256:8f9113bc0f118c3f4b675a2f0e3e18f0e091044ec74d5240b3c62e261cac6cae

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

[WARNING Hostname]: hostname "sh-saas-cvmk8s-node-01" could not be reached

[WARNING Hostname]: hostname "sh-saas-cvmk8s-node-01" lookup sh-saas-cvmk8s-node-01 on 183.60.83.19:53: no such host

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "10.12.16.101:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://10.12.16.101:6443"

[discovery] Requesting info from "https://10.12.16.101:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.12.16.101:6443"

[discovery] Successfully established connection with API Server "10.12.16.101:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@sh-saas-cvmk8s-node-02 ~]# kubeadm join 10.12.16.101:6443 --token uirbod.udq3j3mhciwevjhh --discovery-token-ca-cert-hash sha256:8f9113bc0f118c3f4b675a2f0e3e18f0e091044ec74d5240b3c62e261cac6cae

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

[WARNING Hostname]: hostname "sh-saas-cvmk8s-node-02" could not be reached

[WARNING Hostname]: hostname "sh-saas-cvmk8s-node-02" lookup sh-saas-cvmk8s-node-02 on 183.60.83.19:53: no such host

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "10.12.16.101:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://10.12.16.101:6443"

[discovery] Requesting info from "https://10.12.16.101:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.12.16.101:6443"

[discovery] Successfully established connection with API Server "10.12.16.101:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@sh-saas-cvmk8s-node-03 ~]# kubeadm join 10.12.16.101:6443 --token uirbod.udq3j3mhciwevjhh --discovery-token-ca-cert-hash sha256:8f9113bc0f118c3f4b675a2f0e3e18f0e091044ec74d5240b3c62e261cac6cae

[preflight] Running pre-flight checks.

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 17.06.2-ce. Max validated version: 17.03

[WARNING Hostname]: hostname "sh-saas-cvmk8s-node-03" could not be reached

[WARNING Hostname]: hostname "sh-saas-cvmk8s-node-03" lookup sh-saas-cvmk8s-node-03 on 183.60.83.19:53: no such host

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "10.12.16.101:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://10.12.16.101:6443"

[discovery] Requesting info from "https://10.12.16.101:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.12.16.101:6443"

[discovery] Successfully established connection with API Server "10.12.16.101:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

# All three worker nodes have now joined

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

10.12.96.13 Ready master 17m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.2 Ready <none> 2m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.3 Ready master 26m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.4 Ready <none> 43s v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.5 Ready master 18m v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

10.12.96.6 Ready <none> 39s v1.10.5 <none> CentOS Linux 7 (Core) 3.10.0-862.3.3.el7.x86_64 docker://17.6.2

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get all --all-namespaces -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system pod/coredns-7997f8864c-gcjrz 0/1 Pending 0 1h <none> <none>

kube-system pod/coredns-7997f8864c-pfcxk 0/1 Pending 0 1h <none> <none>

kube-system pod/kube-apiserver-10.12.96.13 1/1 Running 0 1h <none> 10.12.96.13

kube-system pod/kube-apiserver-10.12.96.3 1/1 Running 0 1h <none> 10.12.96.3

kube-system pod/kube-apiserver-10.12.96.5 1/1 Running 0 1h <none> 10.12.96.5

kube-system pod/kube-controller-manager-10.12.96.13 1/1 Running 0 1h <none> 10.12.96.13

kube-system pod/kube-controller-manager-10.12.96.3 1/1 Running 0 1h <none> 10.12.96.3

kube-system pod/kube-controller-manager-10.12.96.5 1/1 Running 0 1h <none> 10.12.96.5

kube-system pod/kube-proxy-7bdn2 1/1 Running 0 1h <none> 10.12.96.3

kube-system pod/kube-proxy-7tz77 1/1 Running 0 50m <none> 10.12.96.2

kube-system pod/kube-proxy-fcq6t 1/1 Running 0 49m <none> 10.12.96.6

kube-system pod/kube-proxy-fxstm 1/1 Running 0 49m <none> 10.12.96.4

kube-system pod/kube-proxy-mxvjf 1/1 Running 0 1h <none> 10.12.96.5

kube-system pod/kube-proxy-zvhm5 1/1 Running 0 1h <none> 10.12.96.13

kube-system pod/kube-scheduler-10.12.96.13 1/1 Running 0 1h <none> 10.12.96.13

kube-system pod/kube-scheduler-10.12.96.3 1/1 Running 0 1h <none> 10.12.96.3

kube-system pod/kube-scheduler-10.12.96.5 1/1 Running 0 1h <none> 10.12.96.5

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 10.254.255.1 <none> 443/TCP 1h <none>

kube-system service/kube-dns ClusterIP 10.254.255.10 <none> 53/UDP,53/TCP 1h k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

kube-system daemonset.apps/kube-proxy 6 6 6 6 6 <none> 1h kube-proxy 172.21.248.242/k8s/kube-proxy-amd64:v1.10.5 k8s-app=kube-proxy

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

kube-system deployment.apps/coredns 2 2 2 0 1h coredns coredns/coredns:1.0.6 k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

kube-system replicaset.apps/coredns-7997f8864c 2 2 0 1h coredns coredns/coredns:1.0.6 k8s-app=kube-dns,pod-template-hash=3553944207

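The three worker nodes have joined the cluster.

However, the pod IPs all show <none> and coredns is still Pending. This is because tencent cloud controller manager has not been deployed yet, so the pod network is not working.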
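To spot pods that are stuck without reading the whole table, the pod listing can be filtered. The following is a small sketch, assuming the column layout of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...); the function name `not_running` is hypothetical.

```shell
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on
# stdin and print "namespace/name status" for every pod whose STATUS column
# is not "Running". The header line is skipped.
not_running() {
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2, $4 }'
}

# Example, on a master:
# kubectl get pods --all-namespaces | not_running
```

At this stage the filter is expected to list only the two coredns pods; once the pod network is working it should print nothing.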

5.4 Deploying tencent cloud controller manager

5.4.1 Building and installing tencent cloud controller manager

Run this on one machine only:

yum install -y git go

mkdir -p /root/go/src/github.com/dbdd4us/

git clone https://github.com/dbdd4us/tencentcloud-cloud-controller-manager.git /root/go/src/github.com/dbdd4us/tencentcloud-cloud-controller-manager

# Build tencentcloud-cloud-controller-manager:

cd /root/go/src/github.com/dbdd4us/tencentcloud-cloud-controller-manager

go build -v

# Build route-ctl, which ships with tencentcloud-cloud-controller-manager:

cd /root/go/src/github.com/dbdd4us/tencentcloud-cloud-controller-manager/route-ctl

go build -v

# Install route-ctl

cp /root/go/src/github.com/dbdd4us/tencentcloud-cloud-controller-manager/route-ctl/route-ctl /usr/local/bin/

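5.4.2 Creating the route table

Reference: https://github.com/dbdd4us/tencentcloud-cloud-controller-manager/blob/master/route-ctl/README.md

The pod network 10.254.0.0/16 must not conflict with any of the following:

- the cluster network CIDR of any other cluster in the same VPC

- the CIDR of the VPC itself

- the CIDRs of the subnet routes in the VPC

- the CIDR of any route table shown by route-ctl list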
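The conflict check comes down to testing whether two CIDR blocks overlap, which can be done with integer arithmetic in the shell. The sketch below is illustrative only: the function names `ip_to_int` and `cidr_overlap` are hypothetical, and the two blocks compared against 10.254.0.0/16 are example values, not the actual VPC configuration.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    # Split the address on dots into the positional parameters.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 (true) if the two CIDR blocks overlap, 1 otherwise.
cidr_overlap() {
    local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
    local len mask
    # Two blocks overlap exactly when they agree on the shorter prefix.
    if [ "$len1" -lt "$len2" ]; then len="$len1"; else len="$len2"; fi
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}

# Example values only: compare the pod network with two other blocks.
for other in 10.12.96.0/24 10.254.255.0/24; do
    if cidr_overlap 10.254.0.0/16 "$other"; then
        echo "$other overlaps 10.254.0.0/16"
    else
        echo "$other does not overlap 10.254.0.0/16"
    fi
done
```

Comparing only the shorter prefix is sufficient because one block contains the other whenever they share those leading bits.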

Set the environment variables that route-ctl needs, then create the route table:

export QCloudSecretId=xxxxxxxxxx

export QCloudSecretKey=xxxxxxxxxx

export QCloudCcsAPIRegion=ap-shanghai

[root@sh-saas-cvmk8s-master-01 k8s]# route-ctl create --route-table-cidr-block 10.254.0.0/16 --route-table-name route-table-cvmk8s --vpc-id vpc-n0fkh7v2

INFO[0000] GET https://ccs.api.qcloud.com/v2/index.php?Action=CreateClusterRouteTable&IgnoreClusterCidrConflict=0&Nonce=5577006791947779410&Region=ap-shanghai&RouteTableCidrBlock=10.254.0.0%2F16&RouteTableName=route-table-cvmk8s&SecretId=xxxxxxxxxx&Signature=REHgrQ2c7C81gQZ%2BW3xJ6MlBX2VhWEZE20b%2BZ9ZagnU%3D&SignatureMethod=HmacSHA256&Timestamp=1530264382&VpcId=vpc-n0fkh7v2 200 {"code":0,"message":"","codeDesc":"Success","data":null} Action=CreateClusterRouteTable


[root@sh-saas-cvmk8s-master-01 k8s]# route-ctl list

RouteTableName RouteTableCidrBlock VpcId

route-table-cvmk8s 10.254.0.0/16 vpc-n0fkh7v2

5.4.3 Creating the tencent-cloud-controller-manager configuration (a Secret)

[root@sh-saas-cvmk8s-master-01 k8s]# echo -n "ap-shanghai" | base64

YXAtc2hhbmdoYWk=

[root@sh-saas-cvmk8s-master-01 k8s]# echo -n "xxxxxxxxxx" | base64

xxxxxxxxxx

[root@sh-saas-cvmk8s-master-01 k8s]# echo -n "xxxxxxxxxx" | base64

xxxxxxxxxx

[root@sh-saas-cvmk8s-master-01 k8s]# echo -n "route-table-cvmk8s" | base64

cm91dGUtdGFibGUtY3Ztazhz

[root@sh-saas-cvmk8s-master-01 k8s]#
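The encoding step can be wrapped in a small function so that no trailing newline slips into the value. This is a sketch; the function name `b64` is hypothetical. It uses `printf '%s'` instead of `echo -n`, and strips line breaks because some base64 implementations wrap long output.

```shell
# Hypothetical helper: base64-encode a value with no trailing newline in the
# input and no line wrapping in the output.
b64() {
    printf '%s' "$1" | base64 | tr -d '\n'
}

b64 "ap-shanghai"; echo          # prints YXAtc2hhbmdoYWk=
b64 "route-table-cvmk8s"; echo   # prints cm91dGUtdGFibGUtY3Ztazhz
```

The two printed values match the ones shown in the transcript above, which is a quick way to confirm that the helper behaves like the manual commands.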

cat <<EOF > tencent-cloud-controller-manager-configmap.yaml
apiVersion: v1
kind: Secret
metadata:
  name: tencentcloud-cloud-controller-manager-config
  namespace: kube-system
data:
  # Note: Secret values must be base64-encoded
  # echo -n "<REGION>" | base64
  TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_REGION: "YXAtc2hhbmdoYWk="
  TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_ID: "xxxxxxxxxx"
  TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_KEY: "xxxxxxxxxx="
  TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_CLUSTER_ROUTE_TABLE: "cm91dGUtdGFibGUtY3Ztazhz"
EOF

kubectl create -f tencent-cloud-controller-manager-configmap.yaml

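5.4.4 Creating the tencent-cloud-controller-manager Deployment

tencent-cloud-controller-manager can talk to the k8s master API server over either http or https.

For production, https is recommended: it is configured through a serviceAccount and is more secure. The API server's insecure port should be closed at the same time. Setting the following two parameters in the kubeadm configuration file closes the insecure port: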

insecure-bind-address: 0.0.0.0

insecure-port: "0"

5.4.4.1 Deploying tencent-cloud-controller-manager over http

cat <<EOF > tencent-cloud-controller-manager.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tencentcloud-cloud-controller-manager
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 2
  template:
    metadata:
      labels:
        app: tencentcloud-cloud-controller-manager
    spec:
      dnsPolicy: Default
      tolerations:
        - key: "node.cloudprovider.kubernetes.io/uninitialized"
          value: "true"
          effect: "NoSchedule"
        - key: "node.kubernetes.io/network-unavailable"
          value: "true"
          effect: "NoSchedule"
      containers:
        - image: ccr.ccs.tencentyun.com/library/tencentcloud-cloud-controller-manager:latest
          name: tencentcloud-cloud-controller-manager
          command:
            - /bin/tencentcloud-cloud-controller-manager
            - --cloud-provider=tencentcloud # use tencentcloud as the cloud provider
            - --allocate-node-cidrs=true # have the tencentcloud cloud provider allocate CIDRs for nodes
            - --cluster-cidr=10.254.0.0/16 # pod network of the cluster; must be created beforehand
            - --master=http://10.12.16.101:8088/ # non-https API address of the master
            - --cluster-name=kubernetes # cluster name
            - --configure-cloud-routes=true
            - --allow-untagged-cloud=true
          env:
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_REGION
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_REGION
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_ID
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_ID
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_KEY
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_KEY
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_CLUSTER_ROUTE_TABLE
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_CLUSTER_ROUTE_TABLE
EOF

kubectl create -f tencent-cloud-controller-manager.yaml

5.4.4.2 Deploying tencent-cloud-controller-manager over https

# Create the serviceAccount

cat <<EOF > cloud-controller-manager.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cloud-controller-manager
  namespace: kube-system
EOF

# Create the cluster roles

cat <<EOF > cloud-controller-manager-roles.yaml
apiVersion: v1
items:
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRole
  metadata:
    name: system:cloud-controller-manager
  rules:
  - apiGroups:
    - ""
    resources:
    - events
    verbs:
    - create
    - patch
    - update
  - apiGroups:
    - ""
    resources:
    - nodes
    verbs:
    - '*'
  - apiGroups:
    - ""
    resources:
    - nodes/status
    verbs:
    - patch
  - apiGroups:
    - ""
    resources:
    - services
    verbs:
    - list
    - patch
    - update
    - watch
  - apiGroups:
    - ""
    resources:
    - serviceaccounts
    verbs:
    - create
  - apiGroups:
    - ""
    resources:
    - persistentvolumes
    verbs:
    - get
    - list
    - update
    - watch
  - apiGroups:
    - ""
    resources:
    - endpoints
    verbs:
    - create
    - get
    - list
    - watch
    - update
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRole
  metadata:
    name: system:cloud-node-controller
  rules:
  - apiGroups:
    - ""
    resources:
    - nodes
    verbs:
    - delete
    - get
    - patch
    - update
    - list
  - apiGroups:
    - ""
    resources:
    - nodes/status
    verbs:
    - patch
  - apiGroups:
    - ""
    resources:
    - events
    verbs:
    - create
    - patch
    - update
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRole
  metadata:
    name: system:pvl-controller
  rules:
  - apiGroups:
    - ""
    resources:
    - persistentvolumes
    verbs:
    - get
    - list
    - watch
  - apiGroups:
    - ""
    resources:
    - events
    verbs:
    - create
    - patch
    - update
kind: List
metadata: {}
EOF

# Create the cluster role bindings

cat <<EOF > cloud-controller-manager-role-bindings.yaml
apiVersion: v1
items:
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRoleBinding
  metadata:
    name: system:cloud-node-controller
  roleRef:
    apiGroup: rbac.authorization.k8s.io
    kind: ClusterRole
    name: system:cloud-node-controller
  subjects:
  - kind: ServiceAccount
    name: cloud-node-controller
    namespace: kube-system
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRoleBinding
  metadata:
    name: system:pvl-controller
  roleRef:
    apiGroup: rbac.authorization.k8s.io
    kind: ClusterRole
    name: system:pvl-controller
  subjects:
  - kind: ServiceAccount
    name: pvl-controller
    namespace: kube-system
- apiVersion: rbac.authorization.k8s.io/v1
  kind: ClusterRoleBinding
  metadata:
    name: system:cloud-controller-manager
  roleRef:
    apiGroup: rbac.authorization.k8s.io
    kind: ClusterRole
    name: cluster-admin
  subjects:
  - kind: ServiceAccount
    name: cloud-controller-manager
    namespace: kube-system
kind: List
metadata: {}
EOF

cat <<EOF > tencent-cloud-controller-manager-https.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tencentcloud-cloud-controller-manager
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 2
  template:
    metadata:
      labels:
        app: tencentcloud-cloud-controller-manager
    spec:
      serviceAccountName: cloud-controller-manager
      dnsPolicy: Default
      tolerations:
        - key: "node.cloudprovider.kubernetes.io/uninitialized"
          value: "true"
          effect: "NoSchedule"
        - key: "node.kubernetes.io/network-unavailable"
          value: "true"
          effect: "NoSchedule"
      containers:
        - image: ccr.ccs.tencentyun.com/library/tencentcloud-cloud-controller-manager:latest
          name: tencentcloud-cloud-controller-manager
          command:
            - /bin/tencentcloud-cloud-controller-manager
            - --cloud-provider=tencentcloud # use tencentcloud as the cloud provider
            - --allocate-node-cidrs=true # have the tencentcloud cloud provider allocate CIDRs for nodes
            - --cluster-cidr=10.254.0.0/16 # pod network of the cluster; must be created beforehand
            - --cluster-name=kubernetes # cluster name
            - --use-service-account-credentials
            - --configure-cloud-routes=true
            - --allow-untagged-cloud=true
          env:
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_REGION
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_REGION
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_ID
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_ID
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_KEY
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_KEY
            - name: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_CLUSTER_ROUTE_TABLE
              valueFrom:
                secretKeyRef:
                  name: tencentcloud-cloud-controller-manager-config
                  key: TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_CLUSTER_ROUTE_TABLE
EOF

kubectl create -f cloud-controller-manager.yaml

kubectl create -f cloud-controller-manager-roles.yaml

kubectl create -f cloud-controller-manager-role-bindings.yaml

kubectl create -f tencent-cloud-controller-manager-https.yaml
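The Deployment above reads four keys from the Secret tencentcloud-cloud-controller-manager-config. If the controller pod fails to start, one thing worth checking is whether all four keys are present. The following is a minimal sketch, not part of the original procedure; `check_ccm_secret_keys` is a hypothetical helper name, and it assumes the Secret lives in the kube-system namespace like the Deployment.

```shell
# Hypothetical helper: report any of the four expected keys that are
# missing or empty in the Secret used by the cloud controller manager.
check_ccm_secret_keys() {
  local secret="tencentcloud-cloud-controller-manager-config"
  local key value missing=0
  for key in \
    TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_REGION \
    TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_ID \
    TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_SECRET_KEY \
    TENCENTCLOUD_CLOUD_CONTROLLER_MANAGER_CLUSTER_ROUTE_TABLE; do
    # jsonpath prints the base64-encoded value, or nothing if the key is absent.
    value=$(kubectl -n kube-system get secret "$secret" \
      -o jsonpath="{.data.$key}" 2>/dev/null)
    if [ -z "$value" ]; then
      echo "missing key: $key"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (against a live cluster):
#   check_ccm_secret_keys && echo "secret looks complete"
```

The function only checks that each key exists and is non-empty; it does not validate the credentials themselves.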

After a minute or two, the coredns pods all reach the Running state and every container has been assigned an IP.

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl get all --all-namespaces -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system pod/coredns-7997f8864c-gcjrz 1/1 Running 0 1h 10.254.2.2 10.12.96.13

kube-system pod/coredns-7997f8864c-pfcxk 1/1 Running 0 1h 10.254.4.2 10.12.96.4

kube-system pod/kube-apiserver-10.12.96.13 1/1 Running 0 1h 10.12.96.13 10.12.96.13

kube-system pod/kube-apiserver-10.12.96.3 1/1 Running 0 1h 10.12.96.3 10.12.96.3

kube-system pod/kube-apiserver-10.12.96.5 1/1 Running 0 1h 10.12.96.5 10.12.96.5

kube-system pod/kube-controller-manager-10.12.96.13 1/1 Running 0 1h 10.12.96.13 10.12.96.13

kube-system pod/kube-controller-manager-10.12.96.3 1/1 Running 0 1h 10.12.96.3 10.12.96.3

kube-system pod/kube-controller-manager-10.12.96.5 1/1 Running 0 1h 10.12.96.5 10.12.96.5

kube-system pod/kube-proxy-7bdn2 1/1 Running 0 1h 10.12.96.3 10.12.96.3

kube-system pod/kube-proxy-7tz77 1/1 Running 0 1h 10.12.96.2 10.12.96.2

kube-system pod/kube-proxy-fcq6t 1/1 Running 0 1h 10.12.96.6 10.12.96.6

kube-system pod/kube-proxy-fxstm 1/1 Running 0 1h 10.12.96.4 10.12.96.4

kube-system pod/kube-proxy-mxvjf 1/1 Running 0 1h 10.12.96.5 10.12.96.5

kube-system pod/kube-proxy-zvhm5 1/1 Running 0 1h 10.12.96.13 10.12.96.13

kube-system pod/kube-scheduler-10.12.96.13 1/1 Running 0 1h 10.12.96.13 10.12.96.13

kube-system pod/kube-scheduler-10.12.96.3 1/1 Running 0 1h 10.12.96.3 10.12.96.3

kube-system pod/kube-scheduler-10.12.96.5 1/1 Running 0 1h 10.12.96.5 10.12.96.5

kube-system pod/tencentcloud-cloud-controller-manager-5bd497dc5b-8qwt9 1/1 Running 0 3m 10.254.5.5 10.12.96.6

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 10.254.255.1 <none> 443/TCP 1h <none>

kube-system service/kube-dns ClusterIP 10.254.255.10 <none> 53/UDP,53/TCP 1h k8s-app=kube-dns

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

kube-system daemonset.apps/kube-proxy 6 6 6 6 6 <none> 1h kube-proxy 172.21.248.242/k8s/kube-proxy-amd64:v1.10.5 k8s-app=kube-proxy

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

kube-system deployment.apps/coredns 2 2 2 2 1h coredns coredns/coredns:1.0.6 k8s-app=kube-dns

kube-system deployment.apps/tencentcloud-cloud-controller-manager 1 1 1 1 3m tencentcloud-cloud-controller-manager ccr.ccs.tencentyun.com/library/tencentcloud-cloud-controller-manager:latest app=tencentcloud-cloud-controller-manager

NAMESPACE NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

kube-system replicaset.apps/coredns-7997f8864c 2 2 2 1h coredns coredns/coredns:1.0.6 k8s-app=kube-dns,pod-template-hash=3553944207

kube-system replicaset.apps/tencentcloud-cloud-controller-manager-5bd497dc5b 1 1 1 3m tencentcloud-cloud-controller-manager ccr.ccs.tencentyun.com/library/tencentcloud-cloud-controller-manager:latest app=tencentcloud-cloud-controller-manager,pod-template-hash=1680538716
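Instead of re-running `kubectl get` by hand, the wait can be scripted. The following is a minimal sketch, not part of the original procedure: `wait_for_running` is a hypothetical helper that polls `kubectl get pods` until every pod in a namespace reports Running, and the retry count and delay are assumed defaults.

```shell
# Hypothetical helper: wait until all pods in a namespace are Running.
# Arguments: namespace (default kube-system), attempts (default 30),
# delay in seconds between attempts (default 10).
wait_for_running() {
  local ns="${1:-kube-system}" tries="${2:-30}" delay="${3:-10}"
  local i=1 pods not_running
  while [ "$i" -le "$tries" ]; do
    # With --no-headers and a single namespace, column 3 is STATUS.
    pods=$(kubectl get pods -n "$ns" --no-headers 2>/dev/null) || pods=""
    not_running=$(printf '%s\n' "$pods" |
      awk 'NF && $3 != "Running" { n++ } END { print n + 0 }')
    # Require at least one pod so an empty or failed listing is not a success.
    if [ -n "$pods" ] && [ "$not_running" -eq 0 ]; then
      echo "all pods in $ns are Running"
      return 0
    fi
    echo "attempt $i/$tries: waiting for pods in $ns"
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage (against a live cluster):
#   wait_for_running kube-system 30 10
```

Pods that finish normally (for example, Job pods in the Completed state) would count as not Running here, so the sketch only suits namespaces of long-running pods such as kube-system in this setup.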

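5.4.5 In-container connectivity test

Use the command below to open a shell in a container, then ping a container IP on another node, a public address, and the IP of a test machine outside the cluster. All of them are reachable.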

kubectl exec tencentcloud-cloud-controller-manager-5bd497dc5b-8qwt9 -n=kube-system -it -- sh

/ # ping 10.254.2.2

PING 10.254.2.2 (10.254.2.2): 56 data bytes

64 bytes from 10.254.2.2: seq=0 ttl=62 time=0.318 ms

64 bytes from 10.254.2.2: seq=1 ttl=62 time=0.312 ms

^C

--- 10.254.2.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.312/0.315/0.318 ms

/ # ping www.163.com

PING www.163.com (59.81.64.174): 56 data bytes

64 bytes from 59.81.64.174: seq=0 ttl=52 time=5.433 ms

64 bytes from 59.81.64.174: seq=1 ttl=52 time=5.398 ms

^C

--- www.163.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 5.398/5.415/5.433 ms

/ # ping 172.21.251.111

PING 172.21.251.111 (172.21.251.111): 56 data bytes

64 bytes from 172.21.251.111: seq=0 ttl=60 time=2.379 ms

64 bytes from 172.21.251.111: seq=1 ttl=60 time=2.229 ms

64 bytes from 172.21.251.111: seq=2 ttl=60 time=2.211 ms

^C

--- 172.21.251.111 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 2.211/2.273/2.379 ms

/ #
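The same checks can be run without an interactive shell. The following is a minimal sketch under stated assumptions: `check_pod_connectivity` is a hypothetical helper, and it assumes the container image ships a `ping` that accepts `-c`, as the one used in the session above appears to.

```shell
# Hypothetical helper: ping each target from inside a pod and report the result.
# Arguments: pod name, namespace, then one or more targets (IP or hostname).
check_pod_connectivity() {
  local pod="$1" ns="$2" target failed=0
  shift 2
  for target in "$@"; do
    # -c 2 sends two echo requests and exits, so no TTY or Ctrl-C is needed.
    if kubectl exec "$pod" -n "$ns" -- ping -c 2 "$target" >/dev/null 2>&1; then
      echo "OK   $target"
    else
      echo "FAIL $target"
      failed=1
    fi
  done
  return "$failed"
}

# Usage with the values from the session above:
#   check_pod_connectivity tencentcloud-cloud-controller-manager-5bd497dc5b-8qwt9 \
#     kube-system 10.254.2.2 www.163.com 172.21.251.111
```

The function returns non-zero if any target is unreachable, which makes it usable as a smoke test after the routes have been configured.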

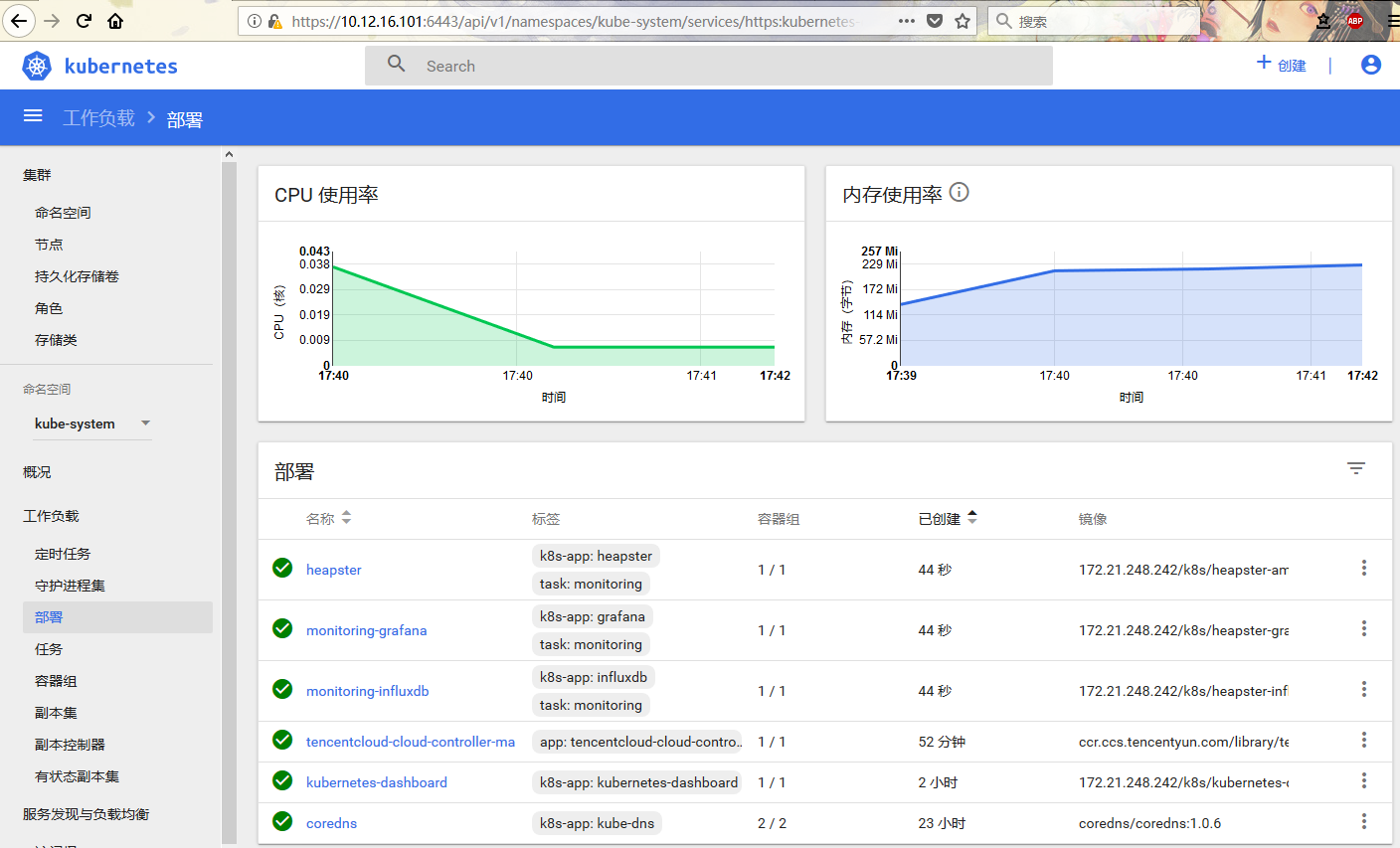

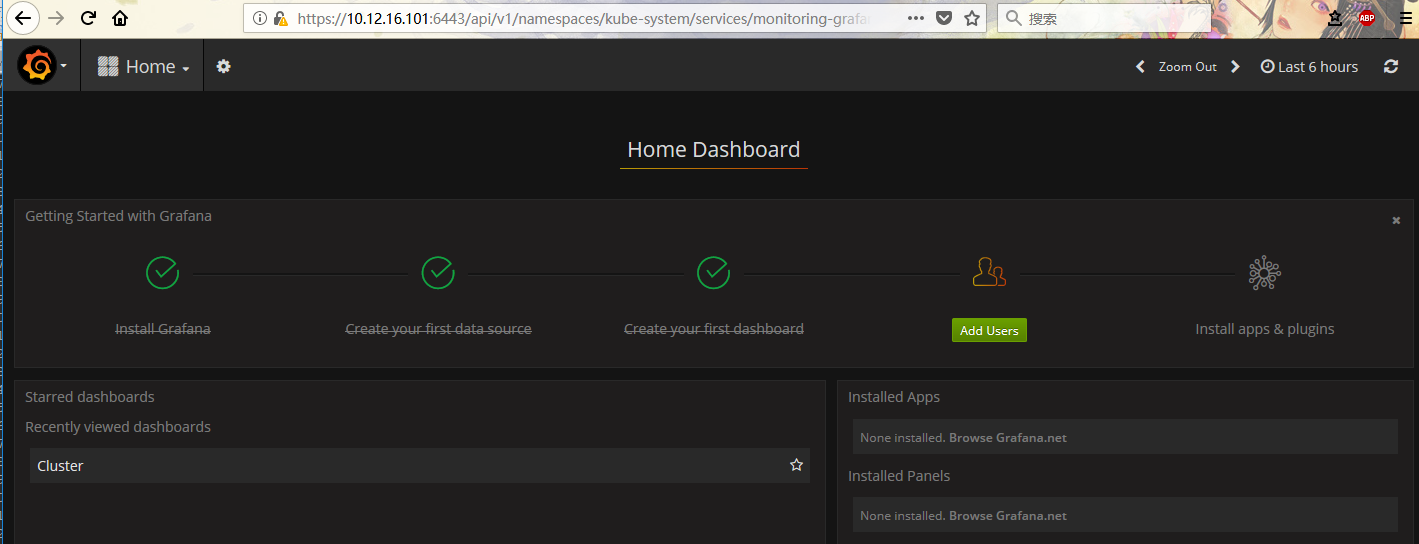

6. Installing and configuring kubernetes-dashboard and other add-ons

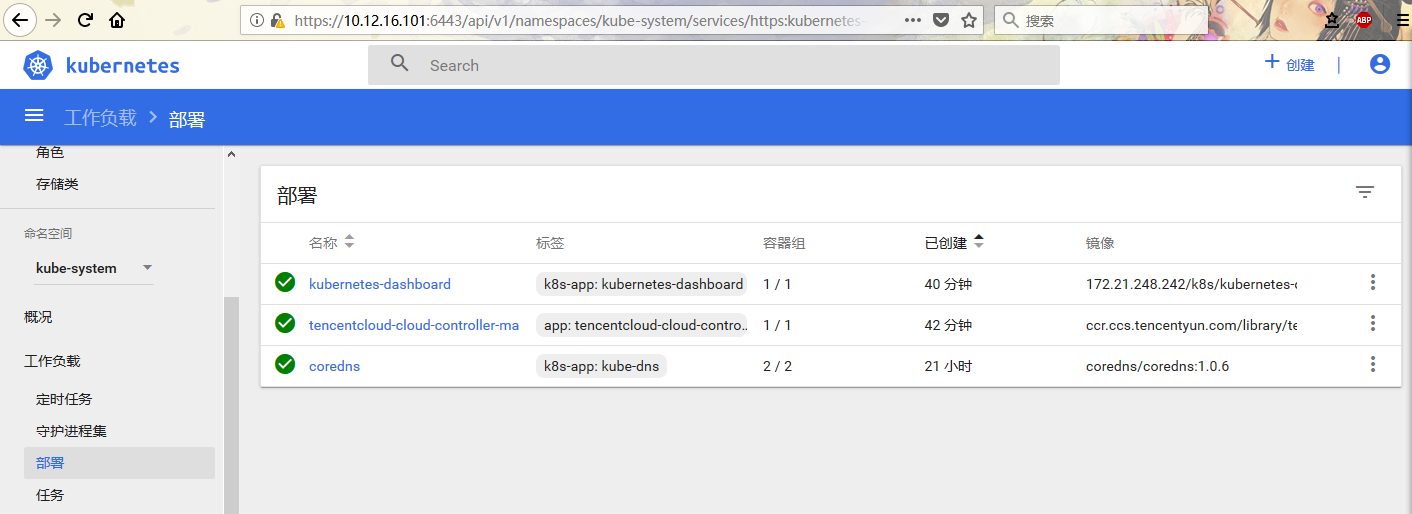

6.1 kubernetes-dashboard

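The kubernetes-dashboard version validated with Kubernetes 1.10 is v1.8.3. Note that the manifest URL below points at the master branch, so the image it deploys may be newer than v1.8.3.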

[root@sh-saas-cvmk8s-master-01 k8s]# wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

[root@sh-saas-cvmk8s-master-01 k8s]# kubectl create -f kubernetes-dashboard.yaml

secret "kubernetes-dashboard-certs" created

serviceaccount "kubernetes-dashboard" created

role.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" created

rolebinding.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" created

deployment.apps "kubernetes-dashboard" created

service "kubernetes-dashboard" created
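Because the manifest is fetched from the master branch, it can be useful to confirm which dashboard image was actually deployed. The following is a minimal sketch; `dashboard_image` and `check_dashboard_version` are hypothetical helper names, and the check simply compares the image tag with the expected version string.

```shell
# Hypothetical helper: print the image of the first container in the
# kubernetes-dashboard Deployment.
dashboard_image() {
  kubectl -n kube-system get deployment kubernetes-dashboard \
    -o jsonpath='{.spec.template.spec.containers[0].image}'
}

# Hypothetical helper: compare the deployed image tag with an expected tag.
check_dashboard_version() {
  local want="$1" image
  image=$(dashboard_image) || return 2
  case "$image" in
    *":$want")
      echo "dashboard image $image matches $want"
      ;;
    *)
      echo "dashboard image $image does not match $want"
      return 1
      ;;
  esac
}

# Usage (against a live cluster):
#   check_dashboard_version v1.8.3
```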

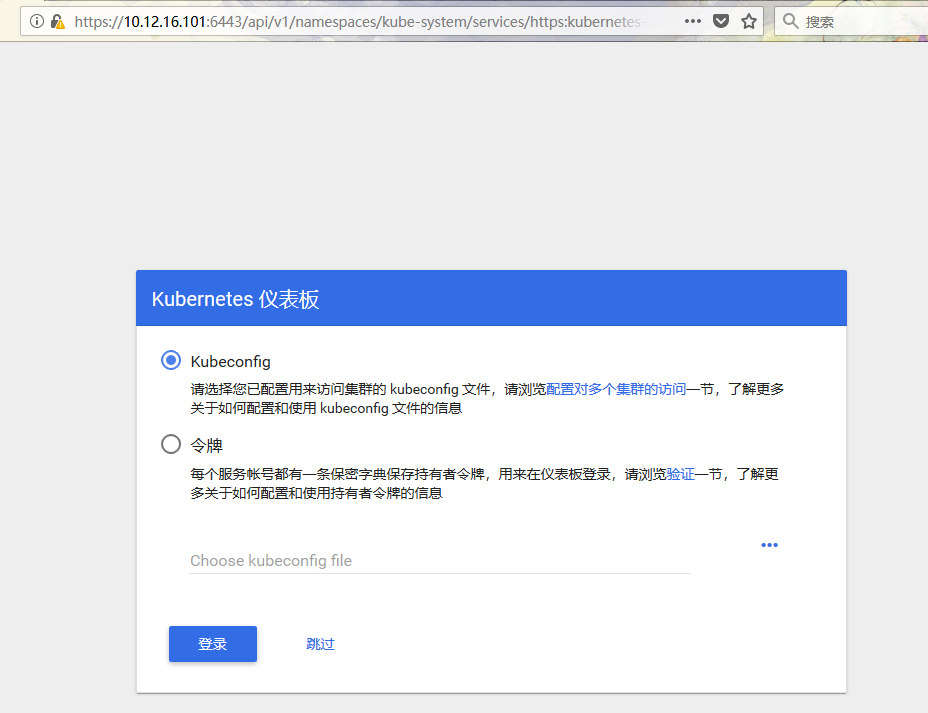

The dashboard is reached through the API server proxy at https://10.12.16.101:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/, but opening that URL returns an error:

{

  "kind": "Status",

  "apiVersion": "v1",

  "metadata": {

  },

  "status": "Failure",

  "message": "services \"https:kubernetes-dashboard:\" is forbidden: User \"system:anonymous\" cannot get services/proxy in the namespace \"kube-system\"",

  "reason": "Forbidden",

  "details": {

    "name": "https:kubernetes-dashboard:",

    "kind": "services"

  },

  "code": 403

}

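The request is rejected because the browser connects as system:anonymous. Use the following commands to export a client certificate in PKCS#12 format, then import it into the browser.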

[root@sh-saas-cvmk8s-master-01 k8s]# cd /etc/kubernetes/pki/

[root@sh-saas-cvmk8s-master-01 pki]# ls

apiserver.crt apiserver-kubelet-client.crt ca.crt front-proxy-ca.crt front-proxy-client.crt sa.key

apiserver.key apiserver-kubelet-client.key ca.key front-proxy-ca.key front-proxy-client.key sa.pub

[root@sh-saas-cvmk8s-master-01 pki]# openssl pkcs12 -export -in apiserver-kubelet-client.crt -out apiserver-kubelet-client.p12 -inkey apiserver-kubelet-client.key

Enter Export Password:

Verifying - Enter Export Password:

[root@sh-saas-cvmk8s-master-01 pki]# ls

apiserver.crt apiserver-kubelet-client.crt apiserver-kubelet-client.p12 ca.key front-proxy-ca.key front-proxy-client.key sa.pub

apiserver.key apiserver-kubelet-client.key ca.crt front-proxy-ca.crt front-proxy-client.crt sa.key

[root@sh-saas-cvmk8s-master-01 pki]# sz apiserver-kubelet-client.p12
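Before importing a .p12 file into a browser, it can help to confirm that the bundle opens with the chosen password and contains the expected certificate. The following is a minimal, self-contained sketch that uses a throwaway self-signed certificate rather than the real apiserver-kubelet-client files; the subject `demo-client`, the password `changeit`, and the file names are all illustrative assumptions.

```shell
# Work in a temporary directory so nothing under /etc/kubernetes/pki is touched.
workdir=$(mktemp -d)

# Throwaway key and self-signed certificate standing in for the real client cert.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -subj "/CN=demo-client" \
  -keyout "$workdir/demo.key" -out "$workdir/demo.crt" 2>/dev/null

# Same export as above, but -passout supplies the export password
# non-interactively. A pass: argument is visible in the process list,
# so this form is only suitable for a demonstration.
openssl pkcs12 -export \
  -in "$workdir/demo.crt" -inkey "$workdir/demo.key" \
  -out "$workdir/demo.p12" -passout pass:changeit

# Read the certificate back out of the bundle and print its subject.
subject=$(openssl pkcs12 -in "$workdir/demo.p12" -nokeys \
  -passin pass:changeit 2>/dev/null | openssl x509 -noout -subject)
echo "$subject"

rm -rf "$workdir"
```

Running the last two `openssl` commands against apiserver-kubelet-client.p12 with its real export password should print the subject of the client certificate in the same way.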

Next, create a user; its token can then be looked up and used to log in:

# Create a ServiceAccount

cat <<EOF | kubectl create -f -

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kube-system

EOF

# Then create a ClusterRoleBinding that grants admin-user the corresponding permissions

cat <<EOF | kubectl create -f -

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user