Kubernetes v1.10 Cluster Installation and Configuration


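1. k8s cluster planning

1.1 Kubernetes 1.10 dependencies

Kubernetes v1.10 is not supported or tested against every version of related packages such as etcd and docker. The recommended versions are: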

- docker: 1.11.2 to 1.13.1 and 17.03.x

- etcd: 3.1.12

- The full list from the release notes:

- The supported etcd server version is 3.1.12, as compared to 3.0.17 in v1.9 (#60988)

- The validated docker versions are the same as for v1.9: 1.11.2 to 1.13.1 and 17.03.x (ref)

- The Go version is go1.9.3, as compared to go1.9.2 in v1.9. (#59012)

- The minimum supported go is the same as for v1.9: go1.9.1. (#55301)

- CNI is the same as v1.9: v0.6.0 (#51250)

- CSI is updated to 0.2.0 as compared to 0.1.0 in v1.9. (#60736)

- The dashboard add-on has been updated to v1.8.3, as compared to 1.8.0 in v1.9. (#57326)

- Heapster is the same as v1.9: v1.5.0. It will be upgraded in v1.11. (ref)

- Cluster Autoscaler has been updated to v1.2.0. (#60842, @mwielgus)

- Updates kube-dns to v1.14.8 (#57918, @rramkumar1)

- Influxdb is unchanged from v1.9: v1.3.3 (#53319)

- Grafana is unchanged from v1.9: v4.4.3 (#53319)

- CAdvisor is v0.29.1 (#60867)

- fluentd-gcp-scaler is v0.3.0 (#61269)

- Updated fluentd in fluentd-es-image to fluentd v1.1.0 (#58525, @monotek)

- fluentd-elasticsearch is v2.0.4 (#58525)

- Updated fluentd-gcp to v3.0.0. (#60722)

- Ingress glbc is v1.0.0 (#61302)

- OIDC authentication is coreos/go-oidc v2 (#58544)

- Updated fluentd-gcp to v2.0.11. (#56927, @x13n)

- Calico has been updated to v2.6.7 (#59130, @caseydavenport)

1.2 Test servers and environment plan

| Server name | IP | Role | Installed services |
|---|---|---|---|
| bs-ops-test-docker-dev-01 | 172.21.251.111 | master | master,etcd |
| bs-ops-test-docker-dev-02 | 172.21.251.112 | master | master,etcd |
| bs-ops-test-docker-dev-03 | 172.21.251.113 | node | node,etcd |
| bs-ops-test-docker-dev-04 | 172.21.248.242 | node, private image registry | harbor,node |
| VIP | 172.21.255.100 | master vip | netmask:255.255.240.0 |

The netmask is 255.255.240.0 on every host. All test servers run the latest CentOS Linux 7.4.

2. Preparation before installing the k8s cluster

2.1 Operating system configuration

Run the following on all servers:

- Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

- Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

- Disable SELinux

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config

- Enable bridge netfilter forwarding

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

ls /proc/sys/net/bridge

- Configure the Kubernetes yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

- Install dependency packages

yum install -y epel-release

yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget vim ntpdate libseccomp libtool-ltdl

- Install bash completion

yum install -y bash-argsparse bash-completion bash-completion-extras

- Configure NTP

systemctl enable ntpdate.service

echo '*/30 * * * * /usr/sbin/ntpdate time7.aliyun.com >/dev/null 2>&1' > /tmp/crontab2.tmp

crontab /tmp/crontab2.tmp

systemctl start ntpdate.service

- Raise the open-file and related limits

echo "* soft nofile 65536" >> /etc/security/limits.conf

echo "* hard nofile 65536" >> /etc/security/limits.conf

echo "* soft nproc 65536" >> /etc/security/limits.conf

echo "* hard nproc 65536" >> /etc/security/limits.conf

echo "* soft memlock unlimited" >> /etc/security/limits.conf

echo "* hard memlock unlimited" >> /etc/security/limits.conf
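The swap and bridge settings above can be spot-checked before moving on. The following is only a sketch: the helper names active_swap_entries and sysctl_key_enabled are made up for this example, and both helpers only read the file they are given.

```shell
# Count fstab entries that still mount swap: lines that are not commented
# out and whose third field (the filesystem type) is "swap".
active_swap_entries() {
    awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "$1"
}

# Succeed only if the sysctl file $1 sets the key $2 to 1.
sysctl_key_enabled() {
    awk -F= -v key="$2" '
        { k = $1; v = $2; gsub(/[ \t]/, "", k); gsub(/[ \t]/, "", v) }
        k == key && v == "1" { found = 1 }
        END { exit !found }
    ' "$1"
}
```

On a prepared server, active_swap_entries /etc/fstab should print 0, and sysctl_key_enabled /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables should succeed.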

2.2 Install docker

Install docker 17.03 on all nodes. Note: 17.03.x is the highest docker version validated with k8s v1.10, so other versions are not recommended.

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

#yum-config-manager --enable docker-ce-edge

#yum-config-manager --enable docker-ce-test

# The official docker yum packages conflict with each other; the --setopt=obsoletes=0 option is required

yum install -y --setopt=obsoletes=0 docker-ce-17.03.2.ce-1.el7.centos docker-ce-selinux-17.03.2.ce-1.el7.centos

systemctl enable docker

systemctl start docker

vim /usr/lib/systemd/system/docker.service

# Find the ExecStart= line and add the registry mirror and the private registry, so that it reads:

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --registry-mirror=https://iw5fhz08.mirror.aliyuncs.com --insecure-registry 172.21.248.242

# Reload the systemd configuration

sudo systemctl daemon-reload

# Restart Docker

sudo systemctl restart docker
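Editing /usr/lib/systemd/system/docker.service in place works, but a package update may overwrite that file. One alternative, not used in the original walkthrough, is a systemd drop-in. The sketch below assumes the usual drop-in directory /etc/systemd/system/docker.service.d; the function name write_docker_dropin and the file name override.conf are choices made for this example.

```shell
# Write a systemd drop-in that overrides dockerd's ExecStart.
# $1 is the drop-in directory; on a real host this would be
# /etc/systemd/system/docker.service.d (an assumption, see above).
write_docker_dropin() {
    dropin_dir=$1
    mkdir -p "$dropin_dir"
    cat > "$dropin_dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the value inherited from docker.service.
ExecStart=
ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --registry-mirror=https://iw5fhz08.mirror.aliyuncs.com --insecure-registry 172.21.248.242
EOF
}
```

After calling write_docker_dropin /etc/systemd/system/docker.service.d, the same daemon-reload and restart steps apply.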

2.3 Install the Harbor private image registry

The following steps only need to be run on the 172.21.248.242 server:

yum -y install docker-compose

wget https://storage.googleapis.com/harbor-releases/release-1.4.0/harbor-offline-installer-v1.4.0.tgz

mkdir /data/

cd /data/

tar xvzf /root/harbor-offline-installer-v1.4.0.tgz

cd harbor

vim harbor.cfg

hostname = 172.21.248.242

sh install.sh

# To change the configuration later:

docker-compose down -v

vim harbor.cfg

./prepare

docker-compose up -d

# To restart:

docker-compose stop

docker-compose start

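The Harbor management UI is at http://172.21.248.242 (user name: admin, password: Harbor12345).

Push and pull test, run from another machine:

docker login 172.21.248.242

Enter the user name and password to log in. Then pull a centos image from the official registry and push it to the private registry: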

docker pull centos

docker tag centos:latest 172.21.248.242/library/centos:latest

docker push 172.21.248.242/library/centos:latest

The image now shows up in the web UI. To pull it:

docker pull 172.21.248.242/library/centos:latest

3. Install and configure etcd

The following steps only need to be run on bs-ops-test-docker-dev-01:

3.1 Create the etcd certificates

3.1.1 Set up cfssl

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

export PATH=/usr/local/bin:$PATH

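3.1.2 Create the CA configuration files

The IPs configured in the certificate below are the etcd node IPs. To make later expansion easier, consider adding a few reserved IP addresses: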

mkdir /root/ssl

cd /root/ssl

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "k8s",

"OU": "System"

}]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@bs-ops-test-docker-dev-01 ssl]# ll

total 20

-rw-r--r-- 1 root root 290 Apr 29 19:46 ca-config.json

-rw-r--r-- 1 root root 1005 Apr 29 19:49 ca.csr

-rw-r--r-- 1 root root 190 Apr 29 19:48 ca-csr.json

-rw------- 1 root root 1675 Apr 29 19:49 ca-key.pem

-rw-r--r-- 1 root root 1363 Apr 29 19:49 ca.pem

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"172.21.251.111",

"172.21.251.112",

"172.21.251.113",

"172.21.255.100",

"172.21.248.242"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem \
    -ca-key=ca-key.pem \
    -config=ca-config.json \
    -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

[root@bs-ops-test-docker-dev-01 ssl]# ll

total 36

-rw-r--r-- 1 root root 290 Apr 29 19:46 ca-config.json

-rw-r--r-- 1 root root 1005 Apr 29 19:49 ca.csr

-rw-r--r-- 1 root root 190 Apr 29 19:48 ca-csr.json

-rw------- 1 root root 1675 Apr 29 19:49 ca-key.pem

-rw-r--r-- 1 root root 1363 Apr 29 19:49 ca.pem

-rw-r--r-- 1 root root 1082 Apr 29 19:53 etcd.csr

-rw-r--r-- 1 root root 348 Apr 29 19:53 etcd-csr.json

-rw------- 1 root root 1675 Apr 29 19:53 etcd-key.pem

-rw-r--r-- 1 root root 1456 Apr 29 19:53 etcd.pem
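Because the certificate is only valid for the IPs listed under "hosts", it can help to build that list from one place when reserving extra addresses. A minimal sketch follows; csr_hosts_json is a hypothetical helper name, and it only formats its arguments as a JSON array.

```shell
# Print the arguments as a JSON array of strings, suitable for the
# "hosts" field of a cfssl CSR file.
csr_hosts_json() {
    out=""
    for host in "$@"; do
        # Add a comma separator before every element except the first.
        out="${out:+$out,}\"$host\""
    done
    printf '[%s]\n' "$out"
}
```

For example, csr_hosts_json 127.0.0.1 172.21.251.111 172.21.251.112 172.21.251.113 prints an array that can be pasted into etcd-csr.json.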

3.1.3 Copy the certificates to the three etcd nodes

mkdir -p /etc/etcd/ssl

cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/

ssh -n bs-ops-test-docker-dev-02 "mkdir -p /etc/etcd/ssl && exit"

ssh -n bs-ops-test-docker-dev-03 "mkdir -p /etc/etcd/ssl && exit"

scp -r /etc/etcd/ssl/*.pem bs-ops-test-docker-dev-02:/etc/etcd/ssl/

scp -r /etc/etcd/ssl/*.pem bs-ops-test-docker-dev-03:/etc/etcd/ssl/

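3.2 Install etcd

k8s v1.10 is tested with etcd 3.1.12, so this guide uses the same version. It is not available from the yum repositories, so it has to be installed from the binary release.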

wget https://github.com/coreos/etcd/releases/download/v3.1.12/etcd-v3.1.12-linux-amd64.tar.gz

tar -xvf etcd-v3.1.12-linux-amd64.tar.gz

mv etcd-v3.1.12-linux-amd64/etcd* /usr/local/bin

# Create the systemd unit file for etcd.

cat > /usr/lib/systemd/system/etcd.service <<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
ExecStart=/usr/local/bin/etcd \\
  --name \${ETCD_NAME} \\
  --cert-file=\${ETCD_CERT_FILE} \\
  --key-file=\${ETCD_KEY_FILE} \\
  --peer-cert-file=\${ETCD_PEER_CERT_FILE} \\
  --peer-key-file=\${ETCD_PEER_KEY_FILE} \\
  --trusted-ca-file=\${ETCD_TRUSTED_CA_FILE} \\
  --peer-trusted-ca-file=\${ETCD_PEER_TRUSTED_CA_FILE} \\
  --initial-advertise-peer-urls \${ETCD_INITIAL_ADVERTISE_PEER_URLS} \\
  --listen-peer-urls \${ETCD_LISTEN_PEER_URLS} \\
  --listen-client-urls \${ETCD_LISTEN_CLIENT_URLS} \\
  --advertise-client-urls \${ETCD_ADVERTISE_CLIENT_URLS} \\
  --initial-cluster-token \${ETCD_INITIAL_CLUSTER_TOKEN} \\
  --initial-cluster \${ETCD_INITIAL_CLUSTER} \\
  --initial-cluster-state new \\
  --data-dir=\${ETCD_DATA_DIR}
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

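# etcd configuration file. This is the configuration for node 172.21.251.111; on the other two etcd nodes, replace the node IP in the listen and advertise URLs with that node's own IP (ETCD_INITIAL_CLUSTER stays the same).

# Set ETCD_NAME to the matching infra1/2/3 for each node.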

cat > /etc/etcd/etcd.conf <<EOF

ETCD_DATA_DIR="/var/lib/etcd/"

ETCD_LISTEN_PEER_URLS="https://172.21.251.111:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.21.251.111:2379,http://127.0.0.1:2379"

ETCD_NAME="infra1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.21.251.111:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.21.251.111:2379"

ETCD_INITIAL_CLUSTER="infra1=https://172.21.251.111:2380,infra2=https://172.21.251.112:2380,infra3=https://172.21.251.113:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"

EOF
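Since the three files differ only in the member name and the node IP, they can also be generated from a template. The sketch below mirrors the file above; render_etcd_conf is a hypothetical helper name, and it prints to standard output rather than writing /etc/etcd/etcd.conf itself.

```shell
# Print the etcd.conf contents for one member.
# $1 = member name (infra1, infra2 or infra3), $2 = that member's IP.
render_etcd_conf() {
    name=$1
    ip=$2
    cat <<EOF
ETCD_DATA_DIR="/var/lib/etcd/"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
ETCD_NAME="${name}"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="infra1=https://172.21.251.111:2380,infra2=https://172.21.251.112:2380,infra3=https://172.21.251.113:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/etcd-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
EOF
}
```

On bs-ops-test-docker-dev-02, for instance, the output of render_etcd_conf infra2 172.21.251.112 could be redirected to /etc/etcd/etcd.conf.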

mkdir /var/lib/etcd/

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

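Once the etcd service is running on all three nodes, run the following command to check the cluster state. Output like the one shown here means the setup works.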

etcdctl \
    --ca-file=/etc/etcd/ssl/ca.pem \
    --cert-file=/etc/etcd/ssl/etcd.pem \
    --key-file=/etc/etcd/ssl/etcd-key.pem \
    cluster-health

2018-04-29 23:55:29.773343 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

2018-04-29 23:55:29.774558 I | warning: ignoring ServerName for user-provided CA for backwards compatibility is deprecated

member 6c87a871854f88b is healthy: got healthy result from https://172.21.251.112:2379

member c5d2ced3329b39da is healthy: got healthy result from https://172.21.251.113:2379

member e6e88b355a379c5f is healthy: got healthy result from https://172.21.251.111:2379

cluster is healthy
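For scripted checks, the cluster-health output can be reduced to a pass/fail result. This is a sketch that relies only on the line formats visible in the output above; etcd_cluster_ok is a hypothetical helper name.

```shell
# Read `etcdctl cluster-health` output on stdin. Succeed only if exactly
# $1 members report healthy and the summary line is "cluster is healthy".
etcd_cluster_ok() {
    awk -v want="$1" '
        /^member .* is healthy/ { healthy++ }
        /^cluster is healthy$/  { summary = 1 }
        END { exit !(healthy == want && summary) }
    '
}
```

The etcdctl command above can be piped into etcd_cluster_ok 3 so that a script stops when a member is missing.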

4. Install and configure keepalived

4.1 Install keepalived

Install keepalived on the master nodes bs-ops-test-docker-dev-01 and bs-ops-test-docker-dev-02:

yum install -y keepalived

systemctl enable keepalived

4.2 Configure keepalived

- Generate the configuration file on bs-ops-test-docker-dev-01

cat <<EOF > /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://172.21.255.100:6443"

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 61

priority 100

advert_int 1

mcast_src_ip 172.21.251.111

nopreempt

authentication {

auth_type PASS

auth_pass kwi28x7sx37uw72

}

unicast_peer {

172.21.251.112

}

virtual_ipaddress {

172.21.255.100/20

}

track_script {

CheckK8sMaster

}

}

EOF

- Generate the configuration file on bs-ops-test-docker-dev-02

cat <<EOF > /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://172.21.255.100:6443"

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 61

priority 90

advert_int 1

mcast_src_ip 172.21.251.112

nopreempt

authentication {

auth_type PASS

auth_pass kwi28x7sx37uw72

}

unicast_peer {

172.21.251.111

}

virtual_ipaddress {

172.21.255.100/20

}

track_script {

CheckK8sMaster

}

}

EOF

4.3 Start and verify keepalived

systemctl restart keepalived

[root@bs-ops-test-docker-dev-01 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 06:dd:4a:00:0a:70 brd ff:ff:ff:ff:ff:ff

inet 172.21.251.111/20 brd 172.21.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.21.255.100/20 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::83a4:1f7:15d3:49eb/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::851c:69f8:270d:b510/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::a8c8:d941:f3c5:c36a/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:b8:b4:a0:b1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global docker0

valid_lft forever preferred_lft forever

The VIP 172.21.255.100 is now bound on bs-ops-test-docker-dev-01.
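To find out which master currently holds the VIP without reading the full listing, the ip addr output can be filtered. A small sketch follows; has_vip is a hypothetical helper name, and it only matches the "inet ADDRESS/" form shown in the listing above.

```shell
# Read `ip addr` output on stdin and succeed if the IPv4 address $1
# appears as an "inet" entry.
has_vip() {
    grep -Fq "inet $1/"
}
```

Running ip addr show eth0 and piping the result into has_vip 172.21.255.100 should succeed on exactly one of the two masters.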

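5. Install and configure the k8s cluster with kubeadm

5.1 Download the official images

The official images are very slow or unreachable from mainland China, so first pull them from a mirror and push them to the private registry: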

docker pull anjia0532/pause-amd64:3.1

docker pull anjia0532/kube-apiserver-amd64:v1.10.2

docker pull anjia0532/kube-proxy-amd64:v1.10.2

docker pull anjia0532/kube-scheduler-amd64:v1.10.2

docker pull anjia0532/kube-controller-manager-amd64:v1.10.2

docker pull anjia0532/k8s-dns-sidecar-amd64:1.14.8

docker pull anjia0532/k8s-dns-kube-dns-amd64:1.14.8

docker pull anjia0532/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker pull anjia0532/kubernetes-dashboard-amd64:v1.8.3

docker pull anjia0532/heapster-influxdb-amd64:v1.3.3

docker pull anjia0532/heapster-grafana-amd64:v4.4.3

docker pull anjia0532/heapster-amd64:v1.5.2

docker pull anjia0532/etcd-amd64:3.1.12

docker pull anjia0532/fluentd-elasticsearch:v2.0.4

docker pull anjia0532/cluster-autoscaler:v1.2.0

docker tag anjia0532/pause-amd64:3.1 172.21.248.242/k8s/pause-amd64:3.1

docker tag anjia0532/kube-apiserver-amd64:v1.10.2 172.21.248.242/k8s/kube-apiserver-amd64:v1.10.2

docker tag anjia0532/kube-proxy-amd64:v1.10.2 172.21.248.242/k8s/kube-proxy-amd64:v1.10.2

docker tag anjia0532/kube-scheduler-amd64:v1.10.2 172.21.248.242/k8s/kube-scheduler-amd64:v1.10.2

docker tag anjia0532/kube-controller-manager-amd64:v1.10.2 172.21.248.242/k8s/kube-controller-manager-amd64:v1.10.2

docker tag anjia0532/k8s-dns-sidecar-amd64:1.14.8 172.21.248.242/k8s/k8s-dns-sidecar-amd64:1.14.8

docker tag anjia0532/k8s-dns-kube-dns-amd64:1.14.8 172.21.248.242/k8s/k8s-dns-kube-dns-amd64:1.14.8

docker tag anjia0532/k8s-dns-dnsmasq-nanny-amd64:1.14.8 172.21.248.242/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker tag anjia0532/kubernetes-dashboard-amd64:v1.8.3 172.21.248.242/k8s/kubernetes-dashboard-amd64:v1.8.3

docker tag anjia0532/heapster-influxdb-amd64:v1.3.3 172.21.248.242/k8s/heapster-influxdb-amd64:v1.3.3

docker tag anjia0532/heapster-grafana-amd64:v4.4.3 172.21.248.242/k8s/heapster-grafana-amd64:v4.4.3

docker tag anjia0532/heapster-amd64:v1.5.2 172.21.248.242/k8s/heapster-amd64:v1.5.2

docker tag anjia0532/etcd-amd64:3.1.12 172.21.248.242/k8s/etcd-amd64:3.1.12

docker tag anjia0532/fluentd-elasticsearch:v2.0.4 172.21.248.242/k8s/fluentd-elasticsearch:v2.0.4

docker tag anjia0532/cluster-autoscaler:v1.2.0 172.21.248.242/k8s/cluster-autoscaler:v1.2.0

docker push 172.21.248.242/k8s/pause-amd64:3.1

docker push 172.21.248.242/k8s/kube-apiserver-amd64:v1.10.2

docker push 172.21.248.242/k8s/kube-proxy-amd64:v1.10.2

docker push 172.21.248.242/k8s/kube-scheduler-amd64:v1.10.2

docker push 172.21.248.242/k8s/kube-controller-manager-amd64:v1.10.2

docker push 172.21.248.242/k8s/k8s-dns-sidecar-amd64:1.14.8

docker push 172.21.248.242/k8s/k8s-dns-kube-dns-amd64:1.14.8

docker push 172.21.248.242/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.8

docker push 172.21.248.242/k8s/kubernetes-dashboard-amd64:v1.8.3

docker push 172.21.248.242/k8s/heapster-influxdb-amd64:v1.3.3

docker push 172.21.248.242/k8s/heapster-grafana-amd64:v4.4.3

docker push 172.21.248.242/k8s/heapster-amd64:v1.5.2

docker push 172.21.248.242/k8s/etcd-amd64:3.1.12

docker push 172.21.248.242/k8s/fluentd-elasticsearch:v2.0.4

docker push 172.21.248.242/k8s/cluster-autoscaler:v1.2.0
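The pull, tag and push steps above repeat the same pattern for every image, so they can be generated from one list. The sketch below only prints the docker commands; the function names mirror_commands and mirror_all_commands are made up for this example, and the image list is copied from the commands above.

```shell
SRC_REPO="anjia0532"
DST_REPO="172.21.248.242/k8s"

# Print the pull/tag/push commands for one image ($1 = name:tag).
mirror_commands() {
    image=$1
    echo "docker pull ${SRC_REPO}/${image}"
    echo "docker tag ${SRC_REPO}/${image} ${DST_REPO}/${image}"
    echo "docker push ${DST_REPO}/${image}"
}

# Print the commands for every image used in this guide.
mirror_all_commands() {
    for image in \
        pause-amd64:3.1 \
        kube-apiserver-amd64:v1.10.2 \
        kube-proxy-amd64:v1.10.2 \
        kube-scheduler-amd64:v1.10.2 \
        kube-controller-manager-amd64:v1.10.2 \
        k8s-dns-sidecar-amd64:1.14.8 \
        k8s-dns-kube-dns-amd64:1.14.8 \
        k8s-dns-dnsmasq-nanny-amd64:1.14.8 \
        kubernetes-dashboard-amd64:v1.8.3 \
        heapster-influxdb-amd64:v1.3.3 \
        heapster-grafana-amd64:v4.4.3 \
        heapster-amd64:v1.5.2 \
        etcd-amd64:3.1.12 \
        fluentd-elasticsearch:v2.0.4 \
        cluster-autoscaler:v1.2.0
    do
        mirror_commands "$image"
    done
}
```

Reviewing the output of mirror_all_commands first and then piping it to sh keeps the run auditable.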

5.2 Install kubelet, kubeadm and kubectl

Install on all nodes:

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet

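On all nodes, edit the kubelet configuration file /etc/systemd/system/kubelet.service.d/10-kubeadm.conf:

# Change this line

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

# Add this line

Environment="KUBELET_EXTRA_ARGS=--v=2 --fail-swap-on=false --pod-infra-container-image=172.21.248.242/k8s/pause-amd64:3.1"

Apply the change: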

systemctl daemon-reload

systemctl enable kubelet
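The kubelet cgroup driver has to match the one docker uses, which docker info --format '{{.CgroupDriver}}' reports. The edit can be scripted instead of done by hand. This is a sketch under assumptions: set_kubelet_cgroup_driver is a hypothetical helper, and it only rewrites an existing --cgroup-driver=... value in the file it is given.

```shell
# Rewrite the --cgroup-driver value in a kubelet drop-in file.
# $1 = path to the drop-in, $2 = driver name (cgroupfs or systemd).
set_kubelet_cgroup_driver() {
    file=$1
    driver=$2
    tmp=$(mktemp)
    # Write to a temporary file first, then copy the result back so the
    # original file keeps its owner and permissions.
    sed "s/--cgroup-driver=[a-z]*/--cgroup-driver=${driver}/" "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

A possible call is set_kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf cgroupfs, followed by systemctl daemon-reload.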

Enable command-line completion:

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

source <(kubeadm completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

echo "source <(kubeadm completion bash)" >> ~/.bashrc

5.3 Configure the cluster with kubeadm

5.3.1 Generate the kubeadm configuration file

Generate the kubeadm configuration file on the master nodes bs-ops-test-docker-dev-01 and bs-ops-test-docker-dev-02:

cat <<EOF > config.yaml
apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
etcd:
  endpoints:
  - https://172.21.251.111:2379
  - https://172.21.251.112:2379
  - https://172.21.251.113:2379
  caFile: /etc/etcd/ssl/ca.pem
  certFile: /etc/etcd/ssl/etcd.pem
  keyFile: /etc/etcd/ssl/etcd-key.pem
  dataDir: /var/lib/etcd
networking:
  podSubnet: 10.244.0.0/16
kubernetesVersion: 1.10.2
api:
  advertiseAddress: "172.21.255.100"
token: "465wc3.r1u0vv92d3slu4tf"
tokenTTL: "0s"
apiServerCertSANs:
- bs-ops-test-docker-dev-01
- bs-ops-test-docker-dev-02
- bs-ops-test-docker-dev-03
- bs-ops-test-docker-dev-04
- 172.21.251.111
- 172.21.251.112
- 172.21.251.113
- 172.21.248.242
- 172.21.255.100
featureGates:
  CoreDNS: true
imageRepository: "172.21.248.242/k8s"
EOF

[root@bs-ops-test-docker-dev-01 ~]# kubeadm init --help | grep service

--service-cidr string Use alternative range of IP address for service VIPs. (default "10.96.0.0/12")

--service-dns-domain string Use alternative domain for services, e.g. "myorg.internal". (default "cluster.local")

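Note: the kubeadm init --help output above shows that the default Service network is 10.96.0.0/12, which is why the cluster DNS IP in /etc/systemd/system/kubelet.service.d/10-kubeadm.conf is 10.96.0.10. This does not conflict with the podSubnet: 10.244.0.0/16 configured earlier: one range is used for pod IPs, the other for Service cluster IPs.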
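The claim that the two ranges do not collide can be checked with a little integer arithmetic. The sketch below is illustrative only; ip_to_int and cidr_contains are helper names invented for this example.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if IPv4 address $2 lies inside CIDR block $1 (e.g. 10.96.0.0/12).
cidr_contains() {
    net=${1%/*}
    bits=${1#*/}
    # Build the netmask: the top "bits" bits set, the rest cleared.
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}
```

With these helpers, cidr_contains 10.96.0.0/12 10.96.0.10 succeeds (the DNS IP is a Service address), while cidr_contains 10.96.0.0/12 10.244.0.1 fails (pod addresses fall outside the Service range).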

5.3.2 Initialize the cluster on bs-ops-test-docker-dev-01

[root@bs-ops-test-docker-dev-01 ~]# kubeadm init --config config.yaml

[init] Using Kubernetes version: v1.10.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [bs-ops-test-docker-dev-01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local bs-ops-test-docker-dev-01 bs-ops-test-docker-dev-02 bs-ops-test-docker-dev-03 bs-ops-test-docker-dev-04] and IPs [10.96.0.1 172.21.255.100 172.21.251.111 172.21.251.112 172.21.251.113 172.21.248.242 172.21.255.100]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [localhost] and IPs [127.0.0.1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [bs-ops-test-docker-dev-01] and IPs [172.21.255.100]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 20.501769 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node bs-ops-test-docker-dev-01 as master by adding a label and a taint

[markmaster] Master bs-ops-test-docker-dev-01 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 465wc3.r1u0vv92d3slu4tf

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 172.21.255.100:6443 --token 465wc3.r1u0vv92d3slu4tf --discovery-token-ca-cert-hash sha256:234294087f9598267de8f1b5f484c56463ae74d56c9484ecee3e6b6aa0f85fe1

As the output suggests, run the following commands; after that kubectl can be used directly.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Note that at this point the node stays NotReady and coredns cannot start. This is expected, because no network plugin has been installed yet.

[root@bs-ops-test-docker-dev-01 ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

bs-ops-test-docker-dev-01 NotReady master 17m v1.10.2 <none> CentOS Linux 7 (Core) 3.10.0-693.21.1.el7.x86_64 docker://17.3.2

[root@bs-ops-test-docker-dev-01 ~]# kubectl get pods --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

kube-system coredns-7997f8864c-mc899 0/1 Pending 0 21m <none> <none>

kube-system coredns-7997f8864c-xgtj9 0/1 Pending 0 21m <none> <none>

kube-system kube-apiserver-bs-ops-test-docker-dev-01 1/1 Running 0 21m 172.21.251.111 bs-ops-test-docker-dev-01

kube-system kube-controller-manager-bs-ops-test-docker-dev-01 1/1 Running 0 22m 172.21.251.111 bs-ops-test-docker-dev-01

kube-system kube-proxy-c9tcq 1/1 Running 0 21m 172.21.251.111 bs-ops-test-docker-dev-01

kube-system kube-scheduler-bs-ops-test-docker-dev-01 1/1 Running 0 20m 172.21.251.111 bs-ops-test-docker-dev-01

If something goes wrong, kubeadm reset returns the node to its initial state, after which the cluster can be initialized again.

[root@bs-ops-test-docker-dev-01 ~]# kubeadm reset

[preflight] Running pre-flight checks.

[reset] Stopping the kubelet service.

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Removing kubernetes-managed containers.

[reset] No etcd manifest found in "/etc/kubernetes/manifests/etcd.yaml". Assuming external etcd.

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

# kubeadm reset does not wipe the data stored in etcd. It has to be cleared manually, otherwise the next init runs into many problems

systemctl stop etcd && rm -rf /var/lib/etcd/* && systemctl start etcd

[root@bs-ops-test-docker-dev-01 ~]# kubeadm init --config config.yaml

[init] Using Kubernetes version: v1.10.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [bs-ops-test-docker-dev-01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local bs-ops-test-docker-dev-01 bs-ops-test-docker-dev-02 bs-ops-test-docker-dev-03 bs-ops-test-docker-dev-04] and IPs [10.96.0.1 172.21.255.100 172.21.251.111 172.21.251.112 172.21.251.113 172.21.248.242 172.21.255.100]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [localhost] and IPs [127.0.0.1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [bs-ops-test-docker-dev-01] and IPs [172.21.255.100]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 29.001939 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node bs-ops-test-docker-dev-01 as master by adding a label and a taint

[markmaster] Master bs-ops-test-docker-dev-01 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 465wc3.r1u0vv92d3slu4tf

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 172.21.255.100:6443 --token 465wc3.r1u0vv92d3slu4tf --discovery-token-ca-cert-hash sha256:bbaea5bd68a1ba5002cf745aa21f1f42f0cef1d04ec2f10203500e4924932706
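The --discovery-token-ca-cert-hash value differs between the two runs above because kubeadm reset deleted /etc/kubernetes/pki and the second init generated a new CA. If the join command is lost, the hash can be recomputed from the CA certificate with the openssl pipeline from the kubeadm documentation. The wrapper name ca_cert_hash below is hypothetical, and the pipeline assumes an RSA CA key, which is what kubeadm generates here.

```shell
# Print the discovery hash ("sha256:<hex>") for the CA certificate at $1:
# the SHA-256 digest of the DER-encoded public key.
ca_cert_hash() {
    hash=$(openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
    echo "sha256:${hash}"
}
```

On the first master this would be called as ca_cert_hash /etc/kubernetes/pki/ca.crt.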

5.3.3 Deploy flannel

Only bs-ops-test-docker-dev-01 needs to run this:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Image version: quay.io/coreos/flannel:v0.10.0-amd64

kubectl create -f kube-flannel.yml

After a minute or two the node and the pods are up. Until the flannel network plugin is installed, coredns cannot start.

[root@bs-ops-test-docker-dev-01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

bs-ops-test-docker-dev-01 Ready master 6m v1.10.2

[root@bs-ops-test-docker-dev-01 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7997f8864c-5wczb 1/1 Running 0 5m

kube-system coredns-7997f8864c-tvqrt 1/1 Running 0 5m

kube-system kube-apiserver-bs-ops-test-docker-dev-01 1/1 Running 0 5m

kube-system kube-controller-manager-bs-ops-test-docker-dev-01 1/1 Running 0 5m

kube-system kube-flannel-ds-n259k 1/1 Running 0 2m

kube-system kube-proxy-4cvhl 1/1 Running 0 5m

kube-system kube-scheduler-bs-ops-test-docker-dev-01 1/1 Running 0 5m
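Instead of reading the pod list by eye, a script can count the pods that are not yet Running. This sketch assumes the column layout shown above (STATUS in the fourth column); pods_not_running is a hypothetical helper name.

```shell
# Read `kubectl get pods --all-namespaces` output on stdin and print how
# many pods have a STATUS other than "Running". The header row is skipped.
pods_not_running() {
    awk '$1 == "NAMESPACE" { next } $4 != "Running" { n++ } END { print n + 0 }'
}
```

Piping kubectl get pods --all-namespaces into pods_not_running prints 0 once everything in the listing above has started.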

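5.3.4 Initialize the second master node

First copy the certificates just generated on the first master to the other master node; worker nodes do not need them:

scp -r /etc/kubernetes/pki bs-ops-test-docker-dev-02:/etc/kubernetes/

Then initialize the second master node (the token in the file can be generated with 'kubeadm token generate'):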

cat <<EOF > config.yaml
apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
etcd:
  endpoints:
  - https://172.21.251.111:2379
  - https://172.21.251.112:2379
  - https://172.21.251.113:2379
  caFile: /etc/etcd/ssl/ca.pem
  certFile: /etc/etcd/ssl/etcd.pem
  keyFile: /etc/etcd/ssl/etcd-key.pem
  dataDir: /var/lib/etcd
networking:
  podSubnet: 10.244.0.0/16
kubernetesVersion: 1.10.2
api:
  advertiseAddress: "172.21.255.100"
token: "465wc3.r1u0vv92d3slu4tf"
tokenTTL: "0s"
apiServerCertSANs:
- bs-ops-test-docker-dev-01
- bs-ops-test-docker-dev-02
- bs-ops-test-docker-dev-03
- bs-ops-test-docker-dev-04
- 172.21.251.111
- 172.21.251.112
- 172.21.251.113
- 172.21.248.242
- 172.21.255.100
featureGates:
  CoreDNS: true
imageRepository: "172.21.248.242/k8s"
EOF
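Before running kubeadm init, the token placed in config.yaml can be sanity-checked. Bootstrap tokens have the form of six lowercase alphanumeric characters, a dot, then sixteen more. The sketch below only checks that shape; the function name `is_bootstrap_token` is hypothetical and not part of kubeadm.

```shell
# Return 0 if the argument looks like a kubeadm bootstrap token,
# i.e. it matches [a-z0-9]{6}.[a-z0-9]{16}; return non-zero otherwise.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

For example, `is_bootstrap_token 465wc3.r1u0vv92d3slu4tf && echo ok` prints ok for the token used in this document.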

[root@bs-ops-test-docker-dev-02 ~]# kubeadm init --config config.yaml

[init] Using Kubernetes version: v1.10.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[certificates] Using the existing ca certificate and key.

[certificates] Using the existing apiserver certificate and key.

[certificates] Using the existing apiserver-kubelet-client certificate and key.

[certificates] Using the existing etcd/ca certificate and key.

[certificates] Using the existing etcd/server certificate and key.

[certificates] Using the existing etcd/peer certificate and key.

[certificates] Using the existing etcd/healthcheck-client certificate and key.

[certificates] Using the existing apiserver-etcd-client certificate and key.

[certificates] Using the existing sa key.

[certificates] Using the existing front-proxy-ca certificate and key.

[certificates] Using the existing front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 0.013231 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node bs-ops-test-docker-dev-02 as master by adding a label and a taint

[markmaster] Master bs-ops-test-docker-dev-02 tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 465wc3.r1u0vv92d3slu4tf

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 172.21.255.100:6443 --token 465wc3.r1u0vv92d3slu4tf --discovery-token-ca-cert-hash sha256:bbaea5bd68a1ba5002cf745aa21f1f42f0cef1d04ec2f10203500e4924932706

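Note that the generated hash is identical to the one printed by the first master.

Checking the k8s status now shows two master nodes. Every pod except coredns has one more instance than before; coredns already had two replicas.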
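The hash matches because it depends only on the cluster CA certificate, which was copied to the second master with scp: it is the SHA-256 digest of the CA's DER-encoded public key. A minimal sketch for recomputing it with openssl follows. The function name `ca_cert_hash` is hypothetical, and the pipeline assumes an RSA CA key.

```shell
# Print the value expected after "sha256:" in --discovery-token-ca-cert-hash
# for the CA certificate given as $1 (assumes an RSA key).
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Running `ca_cert_hash /etc/kubernetes/pki/ca.crt` on either master should print the same 64 hexadecimal characters, since both hold the same ca.crt.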

[root@bs-ops-test-docker-dev-02 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

bs-ops-test-docker-dev-01 Ready master 36m v1.10.2

bs-ops-test-docker-dev-02 Ready master 2m v1.10.2

[root@bs-ops-test-docker-dev-02 ~]# kubectl get all --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/coredns-7997f8864c-5wczb 1/1 Running 0 36m

kube-system pod/coredns-7997f8864c-tvqrt 1/1 Running 0 36m

kube-system pod/kube-apiserver-bs-ops-test-docker-dev-01 1/1 Running 0 35m

kube-system pod/kube-apiserver-bs-ops-test-docker-dev-02 1/1 Running 0 2m

kube-system pod/kube-controller-manager-bs-ops-test-docker-dev-01 1/1 Running 0 35m

kube-system pod/kube-controller-manager-bs-ops-test-docker-dev-02 1/1 Running 0 2m

kube-system pod/kube-flannel-ds-n259k 1/1 Running 0 32m

kube-system pod/kube-flannel-ds-r2b7k 1/1 Running 0 2m

kube-system pod/kube-proxy-4cvhl 1/1 Running 0 36m

kube-system pod/kube-proxy-zmgzm 1/1 Running 0 2m

kube-system pod/kube-scheduler-bs-ops-test-docker-dev-01 1/1 Running 0 35m

kube-system pod/kube-scheduler-bs-ops-test-docker-dev-02 1/1 Running 0 2m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 36m

kube-system service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 36m

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/kube-flannel-ds 2 2 2 2 2 beta.kubernetes.io/arch=amd64 32m

kube-system daemonset.apps/kube-proxy 2 2 2 2 2 <none> 36m

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/coredns 2 2 2 2 36m

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/coredns-7997f8864c 2 2 2 36m

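By default the masters do not run ordinary pods. The following command allows pods to be scheduled on the masters as well; this is not recommended in production.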

[root@bs-ops-test-docker-dev-02 ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

node "bs-ops-test-docker-dev-01" untainted

node "bs-ops-test-docker-dev-02" untainted
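To undo this later, the taint can be put back on each master. The sketch below assumes the NoSchedule effect that kubeadm applies to masters; the function name `restore_master_taint` is hypothetical.

```shell
# Re-add the master taint to every node named on the command line,
# so that ordinary pods are no longer scheduled there.
restore_master_taint() {
  local node
  for node in "$@"; do
    kubectl taint nodes "$node" node-role.kubernetes.io/master=:NoSchedule
  done
}
```

For this cluster that would be `restore_master_taint bs-ops-test-docker-dev-01 bs-ops-test-docker-dev-02`. Pods already running on the masters are not evicted by a NoSchedule taint.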

5.3.4 Joining worker nodes to the cluster

On the node bs-ops-test-docker-dev-03, run the following command to join the cluster:

[root@bs-ops-test-docker-dev-03 ~]# kubeadm join 172.21.255.100:6443 --token 465wc3.r1u0vv92d3slu4tf --discovery-token-ca-cert-hash sha256:bbaea5bd68a1ba5002cf745aa21f1f42f0cef1d04ec2f10203500e4924932706

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

Suggestion: go get github.com/kubernetes-incubator/cri-tools/cmd/crictl

[preflight] Starting the kubelet service

[discovery] Trying to connect to API Server "172.21.255.100:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://172.21.255.100:6443"

[discovery] Requesting info from "https://172.21.255.100:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "172.21.255.100:6443"

[discovery] Successfully established connection with API Server "172.21.255.100:6443"

This node has joined the cluster:

* Certificate signing request was sent to master and a response

was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

[root@bs-ops-test-docker-dev-02 ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

bs-ops-test-docker-dev-01 Ready master 48m v1.10.2 <none> CentOS Linux 7 (Core) 3.10.0-693.21.1.el7.x86_64 docker://17.3.2

bs-ops-test-docker-dev-02 Ready master 13m v1.10.2 <none> CentOS Linux 7 (Core) 3.10.0-693.21.1.el7.x86_64 docker://17.3.2

bs-ops-test-docker-dev-03 Ready <none> 1m v1.10.2 <none> CentOS Linux 7 (Core) 3.10.0-693.21.1.el7.x86_64 docker://17.3.2

bs-ops-test-docker-dev-03 has now joined the cluster; the whole process is very convenient.

6. Installing and configuring kubernetes-dashboard and other add-ons

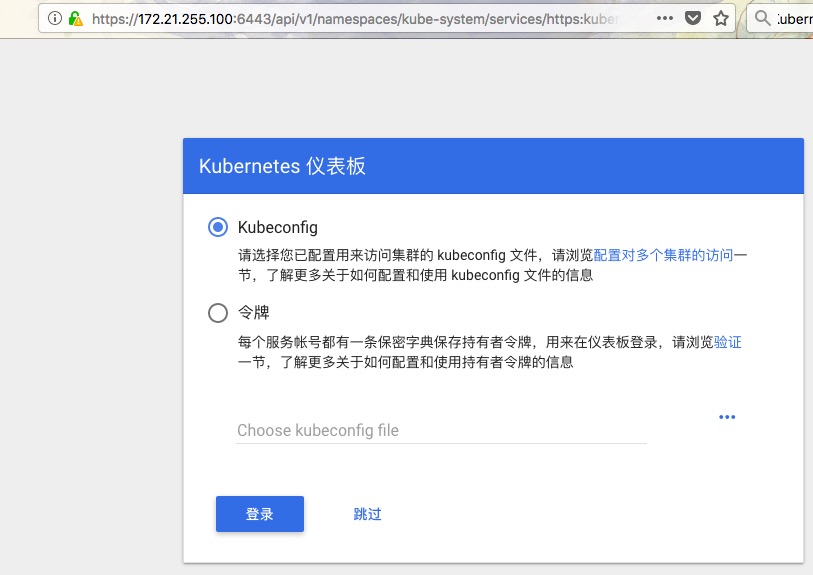

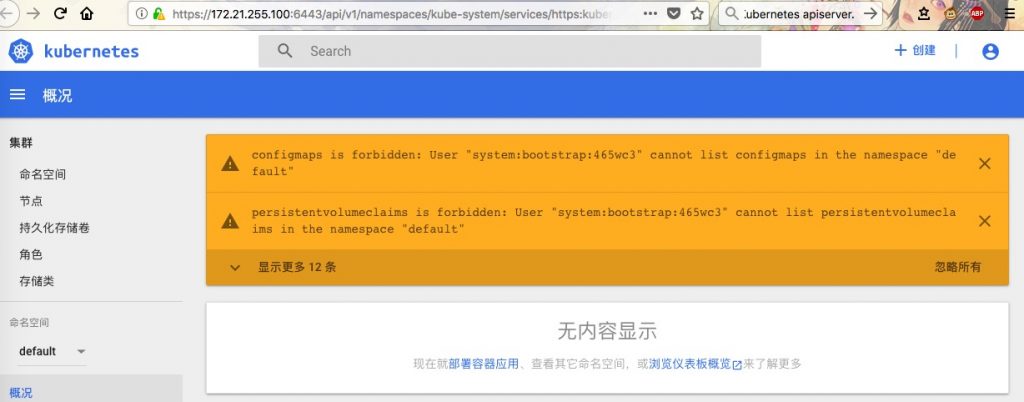

6.1 kubernetes-dashboard

The dashboard version tested with kubernetes 1.10 is v1.8.3.

wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

kubectl create -f kubernetes-dashboard.yaml

The dashboard is reached at https://172.21.255.100:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/, but opening that URL returned an error:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "services \"https:kubernetes-dashboard:\" is forbidden: User \"system:anonymous\" cannot get services/proxy in the namespace \"kube-system\"",

"reason": "Forbidden",

"details": {

"name": "https:kubernetes-dashboard:",

"kind": "services"

},

"code": 403

}

Generate a client certificate with the following command and import it into the browser.

openssl pkcs12 -export -in apiserver-kubelet-client.crt -out apiserver-kubelet-client.p12 -inkey apiserver-kubelet-client.key

Opening the page still returned an error, however:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "no endpoints available for service \"https:kubernetes-dashboard:\"",

"reason": "ServiceUnavailable",

"code": 503

}

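This error turned out to be caused by the dashboard container crashing. It crashed because I had uncommented the api-server line in kubernetes-dashboard.yaml. Commenting that line out again and recreating the resources fixed it.

The login token is looked up with the following command (the admin-user ServiceAccount it refers to is created in the steps after it):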

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Solution:

# Create a ServiceAccount
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
EOF

# Then create a ClusterRoleBinding that grants admin-user the required permissions
cat <<EOF | kubectl create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF

# Look up the token of admin-user

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
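The describe output contains several fields, while the dashboard login form only needs the token itself. A small sketch for cutting it out follows; the function name `extract_token` is hypothetical, and it assumes the token appears on a line of the form `token: <value>`, as in the describe output.

```shell
# Print only the token value from `kubectl describe secret` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

It can be appended to the command above, for example `kubectl -n kube-system describe secret ... | extract_token`, and the result pasted into the login page.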

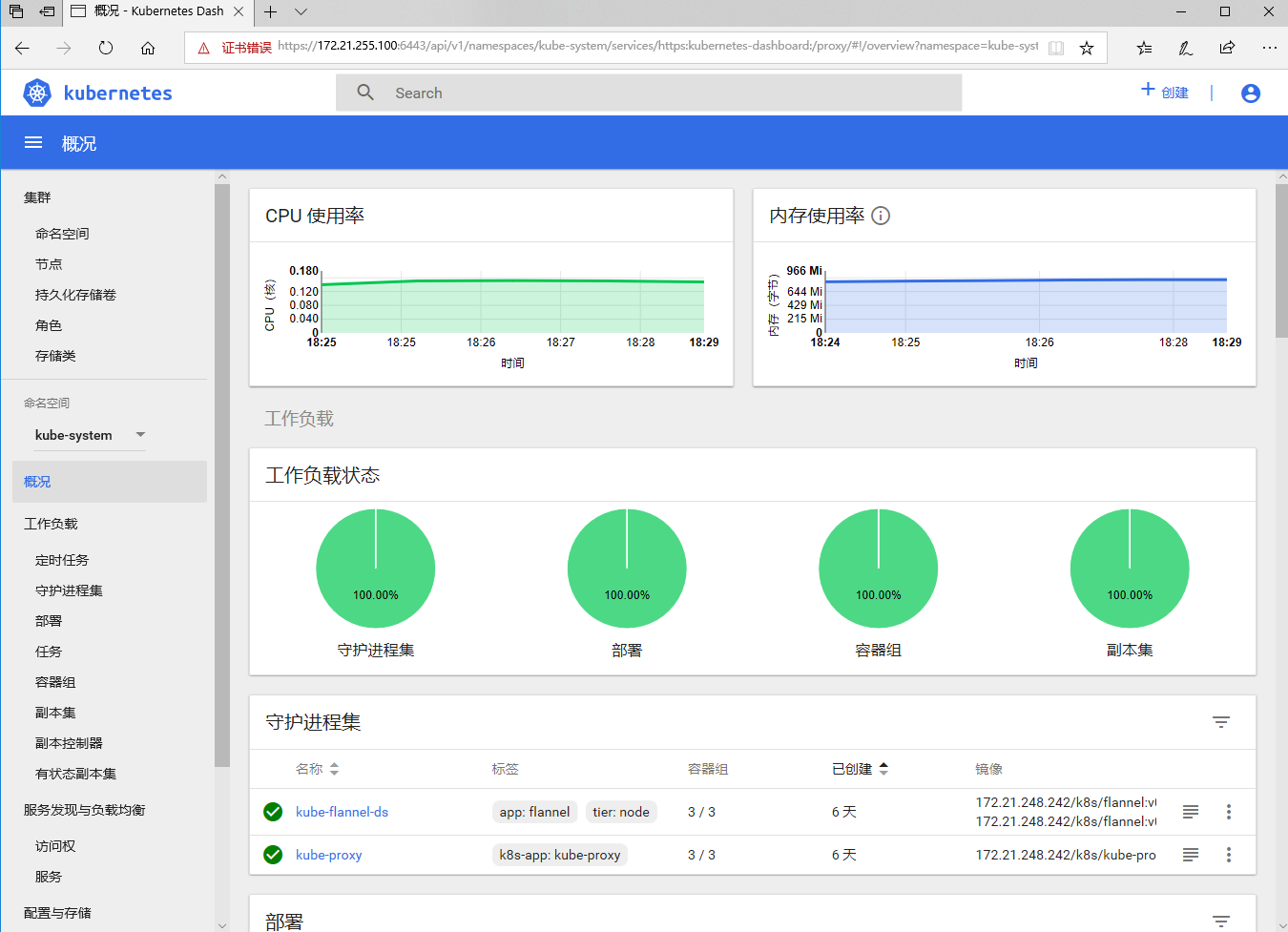

用admin-user的token登入,发现这一次没有报错,完美,如图:

6.2 heapster安装配置

wget https://github.com/kubernetes/heapster/archive/v1.5.2.tar.gz

tar xvzf v1.5.2.tar.gz

cd heapster-1.5.2/

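The deployment files are under heapster-1.5.2/deploy/kube-config/influxdb; change the image fields in them to point at the local registry. heapster ships a kube.sh script that applies these files. Remember to also apply rbac/heapster-rbac.yaml, otherwise heapster will lack the permissions it needs. The session below shows both steps.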
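The image change can be scripted rather than edited by hand. This is only a sketch: the function name `rewrite_image_prefix` is hypothetical, and the upstream prefix passed in the usage example (k8s.gcr.io) is an assumption that should be checked against the image lines actually present in the YAML files.

```shell
# Replace the registry prefix in "image:" lines of the given files.
# $1 = prefix to replace, $2 = new prefix, remaining arguments = files.
# A .bak copy of each file is kept. Dots in $1 are treated as regex
# wildcards, which is harmless for ordinary registry names.
rewrite_image_prefix() {
  local from="$1" to="$2"
  shift 2
  sed -i.bak "s|image: ${from}/|image: ${to}/|" "$@"
}
```

Run from the heapster-1.5.2 directory, a call such as `rewrite_image_prefix k8s.gcr.io 172.21.248.242/k8s deploy/kube-config/influxdb/*.yaml` would point the manifests at the private registry used throughout this document, provided the images have been pushed there.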

cd /root/heapster/heapster-1.5.2/deploy

[root@bs-ops-test-docker-dev-01 deploy]# pwd

/root/heapster/heapster-1.5.2/deploy

[root@bs-ops-test-docker-dev-01 deploy]# ll

total 12

drwxrwxr-x 2 root root 4096 Mar 20 17:10 docker

drwxrwxr-x 8 root root 4096 Mar 20 17:10 kube-config

-rwxrwxr-x 1 root root 869 Mar 20 17:10 kube.sh

[root@bs-ops-test-docker-dev-01 deploy]# sh kube.sh

Usage: kube.sh {start|stop|restart}

[root@bs-ops-test-docker-dev-01 deploy]# sh kube.sh start

heapster pods have been setup

[root@bs-ops-test-docker-dev-01 deploy]# cd kube-config

[root@bs-ops-test-docker-dev-01 kube-config]# kubectl create -f rbac/heapster-rbac.yaml

clusterrolebinding.rbac.authorization.k8s.io "heapster" created

[root@bs-ops-test-docker-dev-01 deploy]# kubectl get all -n=kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-7997f8864c-5wczb 1/1 Running 0 6d

pod/coredns-7997f8864c-tvqrt 1/1 Running 0 6d

pod/heapster-855c88b45-npft9 1/1 Running 0 1m

pod/kube-apiserver-bs-ops-test-docker-dev-01 1/1 Running 0 6d

pod/kube-apiserver-bs-ops-test-docker-dev-02 1/1 Running 0 6d

pod/kube-controller-manager-bs-ops-test-docker-dev-01 1/1 Running 0 6d

pod/kube-controller-manager-bs-ops-test-docker-dev-02 1/1 Running 0 6d

pod/kube-flannel-ds-b2gss 1/1 Running 0 6d

pod/kube-flannel-ds-n259k 1/1 Running 0 6d

pod/kube-flannel-ds-r2b7k 1/1 Running 0 6d

pod/kube-proxy-4cvhl 1/1 Running 0 6d

pod/kube-proxy-z6dkq 1/1 Running 0 6d

pod/kube-proxy-zmgzm 1/1 Running 0 6d

pod/kube-scheduler-bs-ops-test-docker-dev-01 1/1 Running 0 6d

pod/kube-scheduler-bs-ops-test-docker-dev-02 1/1 Running 0 6d

pod/kubernetes-dashboard-85d6464777-cr6sz 1/1 Running 0 6d

pod/monitoring-grafana-5c5b5656d4-stvkh 1/1 Running 0 1m

pod/monitoring-influxdb-5f7597c95d-5g7g5 1/1 Running 0 1m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/heapster ClusterIP 10.100.16.218 <none> 80/TCP 1m

service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 6d

service/kubernetes-dashboard ClusterIP 10.101.92.10 <none> 443/TCP 6d

service/monitoring-grafana ClusterIP 10.100.199.102 <none> 80/TCP 1m

service/monitoring-influxdb ClusterIP 10.107.211.129 <none> 8086/TCP 1m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/kube-flannel-ds 3 3 3 3 3 beta.kubernetes.io/arch=amd64 6d

daemonset.apps/kube-proxy 3 3 3 3 3 <none> 6d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 2 2 2 2 6d

deployment.apps/heapster 1 1 1 1 1m

deployment.apps/kubernetes-dashboard 1 1 1 1 6d

deployment.apps/monitoring-grafana 1 1 1 1 1m

deployment.apps/monitoring-influxdb 1 1 1 1 1m

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-7997f8864c 2 2 2 6d

replicaset.apps/heapster-855c88b45 1 1 1 1m

replicaset.apps/kubernetes-dashboard-85d6464777 1 1 1 6d

replicaset.apps/monitoring-grafana-5c5b5656d4 1 1 1 1m

replicaset.apps/monitoring-influxdb-5f7597c95d 1 1 1 1m

The result is shown below; compared with the earlier screenshot, CPU and memory monitoring information is now displayed:

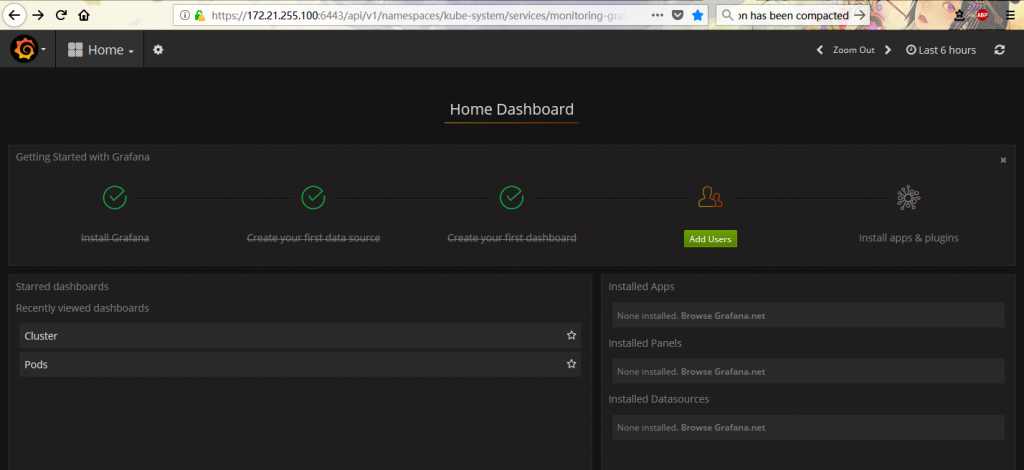

Accessing grafana:

[root@bs-ops-test-docker-dev-01 kube-config]# kubectl cluster-info

Kubernetes master is running at https://172.21.255.100:6443

Heapster is running at https://172.21.255.100:6443/api/v1/namespaces/kube-system/services/heapster/proxy

KubeDNS is running at https://172.21.255.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

monitoring-grafana is running at https://172.21.255.100:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

monitoring-influxdb is running at https://172.21.255.100:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy
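All of the URLs printed by cluster-info follow the same API server proxy pattern, so the address of any service can be built from its namespace and name. A minimal sketch, with the hypothetical function name `service_proxy_url`:

```shell
# Build the API server proxy URL for a service.
# $1 = API server host:port, $2 = namespace, $3 = service name
service_proxy_url() {
  local apiserver="$1" namespace="$2" service="$3"
  printf 'https://%s/api/v1/namespaces/%s/services/%s/proxy\n' \
    "$apiserver" "$namespace" "$service"
}
```

For example, `service_proxy_url 172.21.255.100:6443 kube-system monitoring-grafana` prints the grafana address listed above.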

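Opening https://172.21.255.100:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy in a browser shows the Grafana page, which contains monitoring information for the K8s cluster and for each pod.

That concludes the installation and configuration of k8s. Next I plan to test adding and removing nodes, autoscaling, and related features.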